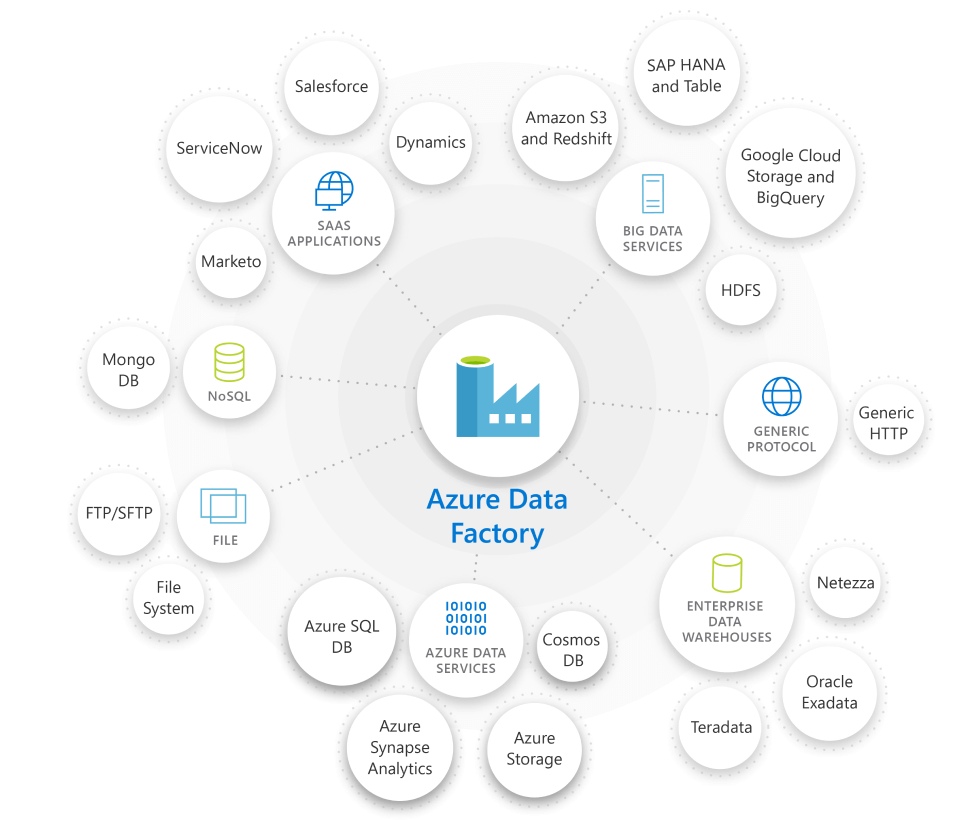

Let us take a simple example in which we move contact records from a CSV file stored in an Azure File Share to Dataverse / Dynamics 365, using upsert.

The CSV file has 50,000 sample contact records (generated using https://extendsclass.com/csv-generator.html) and is stored in Azure File Storage.

Another option for generating sample data:

https://nishantrana.me/2020/05/26/using-data-spawner-component-ssis-to-generate-sample-data-in-dynamics-365/

Below is the Source in our Data Factory pipeline.

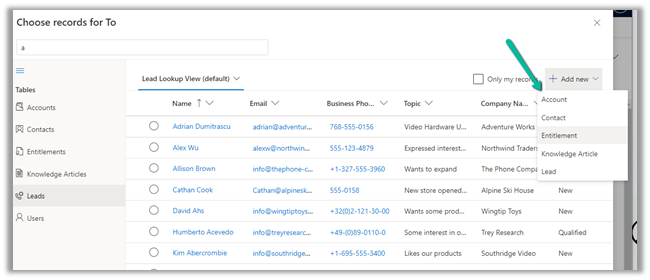

The Sink is our Dynamics 365 / Dataverse sandbox environment. Here we are using the Upsert write behavior.

For the Sink, the default Write batch size is 10.

Max concurrent connections specifies the upper limit of concurrent connections established to the data store during the activity run.

Below is our Mapping configuration.

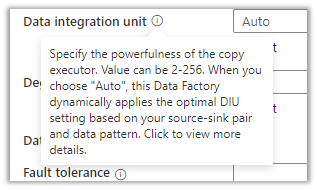

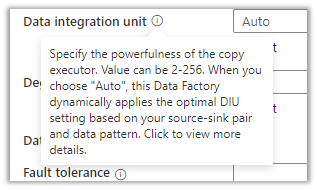

The Settings tab lets us specify the following:

- Data Integration Unit (DIU) specifies the power of the copy execution (a combination of CPU, memory, and network resources).

- Degree of copy parallelism specifies the maximum number of parallel threads to be used.
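As a rough illustration of where these settings end up, the sketch below builds the `typeProperties` fragment of a Copy activity as a Python dictionary and prints it as JSON. The helper name `copy_activity_type_properties` is hypothetical, and the property names (`writeBatchSize`, `parallelCopies`, `dataIntegrationUnits`, `maxConcurrentConnections`) are as I understand them from the connector documentation, so compare against the JSON exported from your own pipeline. Leaving a value as `None` is assumed to mean "keep the default / Auto".

```python
import json
from typing import Optional


def copy_activity_type_properties(
    write_batch_size: int = 10,
    parallel_copies: Optional[int] = None,
    data_integration_units: Optional[int] = None,
    max_concurrent_connections: Optional[int] = None,
) -> dict:
    """Build an illustrative typeProperties fragment for a CSV -> Dataverse copy."""
    sink = {
        "type": "CommonDataServiceForAppsSink",  # assumed sink type for Dataverse
        "writeBehavior": "upsert",
        "writeBatchSize": write_batch_size,
    }
    if max_concurrent_connections is not None:
        sink["maxConcurrentConnections"] = max_concurrent_connections

    props = {
        "source": {"type": "DelimitedTextSource"},  # CSV source
        "sink": sink,
    }
    # Omitted keys are assumed to fall back to the service defaults (Auto).
    if parallel_copies is not None:
        props["parallelCopies"] = parallel_copies
    if data_integration_units is not None:
        props["dataIntegrationUnits"] = data_integration_units
    return props


# Example: batch size 10, parallelism 10, DIU left on Auto.
default_run = copy_activity_type_properties(write_batch_size=10, parallel_copies=10)
print(json.dumps(default_run, indent=2))
```

Keeping the optional settings out of the dictionary when they are not set mirrors leaving the corresponding fields blank in the designer.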

Let us run the pipeline with the default values.

- Write Batch Size (Sink) – 10

- Degree of copy parallelism – 10

- Data integration unit – Auto (4)

The result: it took around 58 minutes to create 50K contact records.

We then ran the pipeline a few more times with different batch sizes and degrees of copy parallelism.

We kept Max concurrent connections blank and Data Integration Unit as Auto. (During our testing, even when we set it to higher values, the used DIUs value was always 4.)

Below are the results we got:
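To put the baseline in perspective, here is a small back-of-the-envelope calculation. It assumes each write batch corresponds to one batched request to Dataverse (an assumption, not something measured here); the function names are purely illustrative.

```python
import math


def records_per_second(total_records: int, minutes: float) -> float:
    """Average write throughput over the whole run."""
    return total_records / (minutes * 60)


def batch_count(total_records: int, write_batch_size: int) -> int:
    """Number of batches needed, assuming one request per batch."""
    return math.ceil(total_records / write_batch_size)


baseline_rate = records_per_second(50_000, 58)
baseline_batches = batch_count(50_000, 10)

print(f"{baseline_rate:.1f} records/second across {baseline_batches} batches")
```

With the default batch size of 10, the run needs 5,000 batches, which is one reason a larger batch size is worth testing.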

| Write Batch Size | Degree of copy parallelism | Data Integration Unit (Auto) | Total Time (Minutes) |
| --- | --- | --- | --- |
| 100 | 8 | 4 | 35 |
| 100 | 16 | 4 | 29 |
| 1000 | 32 | 4 | 35 |
| 250 | 8 | 4 | 35 |
| 250 | 16 | 4 | 25 |
| 250 | 32 | 4 | 55 |
| 500 | 8 | 4 | 38 |
| 500 | 16 | 4 | 29 |
| 500 | 32 | 4 | 28 |
| 750 | 8 | 4 | 37 |
| 750 | 16 | 4 | 25 |
| 750 | 32 | 4 | 17 |
| 999 | 8 | 4 | 36 |
| 999 | 16 | 4 | 30 |
| 999 | 32 | 4 | 20 |
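For readers who want to slice these numbers themselves, the sketch below loads the rows exactly as listed above and reports the fastest run, its speed-up over the 58-minute default run, and the spread of timings per degree of parallelism. The variable names are illustrative only.

```python
from collections import defaultdict

BASELINE_MINUTES = 58  # default run: batch size 10

# (write batch size, degree of copy parallelism, total minutes), as listed in the post
RESULTS = [
    (100, 8, 35), (100, 16, 29), (1000, 32, 35),
    (250, 8, 35), (250, 16, 25), (250, 32, 55),
    (500, 8, 38), (500, 16, 29), (500, 32, 28),
    (750, 8, 37), (750, 16, 25), (750, 32, 17),
    (999, 8, 36), (999, 16, 30), (999, 32, 20),
]

# Fastest configuration and its speed-up relative to the default run.
best = min(RESULTS, key=lambda row: row[2])
speedup = BASELINE_MINUTES / best[2]
print(f"Fastest: batch={best[0]}, parallelism={best[1]}, {best[2]} min "
      f"({speedup:.1f}x faster than the default run)")

# Range of timings observed for each degree of parallelism.
minutes_by_parallelism = defaultdict(list)
for _batch, parallelism, minutes in RESULTS:
    minutes_by_parallelism[parallelism].append(minutes)

for parallelism in sorted(minutes_by_parallelism):
    times = minutes_by_parallelism[parallelism]
    print(f"parallelism {parallelism}: {min(times)} to {max(times)} min")
```

The per-parallelism ranges make it easy to see that the highest parallelism produced both the fastest and the slowest of the tuned runs.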

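The results show that increasing the batch size and degree of copy parallelism generally improved performance in our scenario, although not uniformly: the fastest run (17 minutes) used a batch size of 750 with a parallelism of 32, while a batch size of 250 with the same parallelism took 55 minutes.

Ideally, we should run a few tests with different combinations before settling on a specific configuration, as the results can vary.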
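One simple way to plan such test runs is to enumerate the combinations up front. The sketch below is only a planning aid: the candidate values are examples, and the dictionary keys `writeBatchSize` and `parallelCopies` are assumed to match the Copy activity property names.

```python
from itertools import product

# Example candidate values; adjust to suit your own environment.
batch_sizes = [100, 250, 500, 750, 999]
parallelism_levels = [8, 16, 32]

# Every batch size paired with every degree of parallelism.
test_matrix = [
    {"writeBatchSize": batch, "parallelCopies": parallelism}
    for batch, parallelism in product(batch_sizes, parallelism_levels)
]

print(f"{len(test_matrix)} runs planned")
for run in test_matrix:
    print(run)
```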

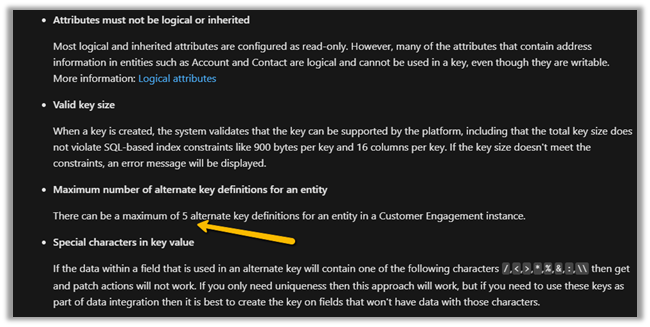

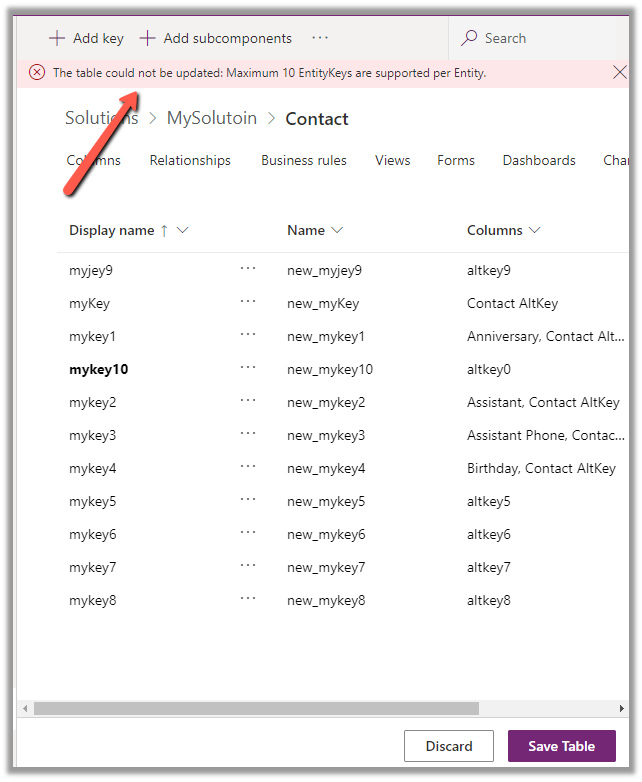

On trying to set the batch size to more than 1000, we get the below error:

ExecuteMultiple Request batch size exceeds the maximum batch size allowed.
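If the batch size is set from a parameter or script, a small guard can catch this before the pipeline runs. This is a minimal sketch that takes 1000 as the limit, based on the behavior described above; the constant and function names are hypothetical.

```python
MAX_WRITE_BATCH_SIZE = 1000  # values above this produced the error described above


def safe_write_batch_size(requested: int) -> int:
    """Return a batch size that stays within the observed limit."""
    if requested < 1:
        raise ValueError("write batch size must be at least 1")
    return min(requested, MAX_WRITE_BATCH_SIZE)


print(safe_write_batch_size(750))   # within the limit, returned unchanged
print(safe_write_batch_size(5000))  # capped at the limit
```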

Also refer to:

Optimizing Data Migration – https://community.dynamics.com/crm/b/crminthefield/posts/optimizing-data-migration-integration-with-power-platform

Using Data Factory with Dynamics 365 – https://nishantrana.me/2020/10/21/posts-on-azure-data-factory/

Optimum batch size with SSIS – https://nishantrana.me/2018/06/04/optimum-batch-size-while-using-ssis-integration-toolkit-for-microsoft-dynamics-365/

Hope it helps..