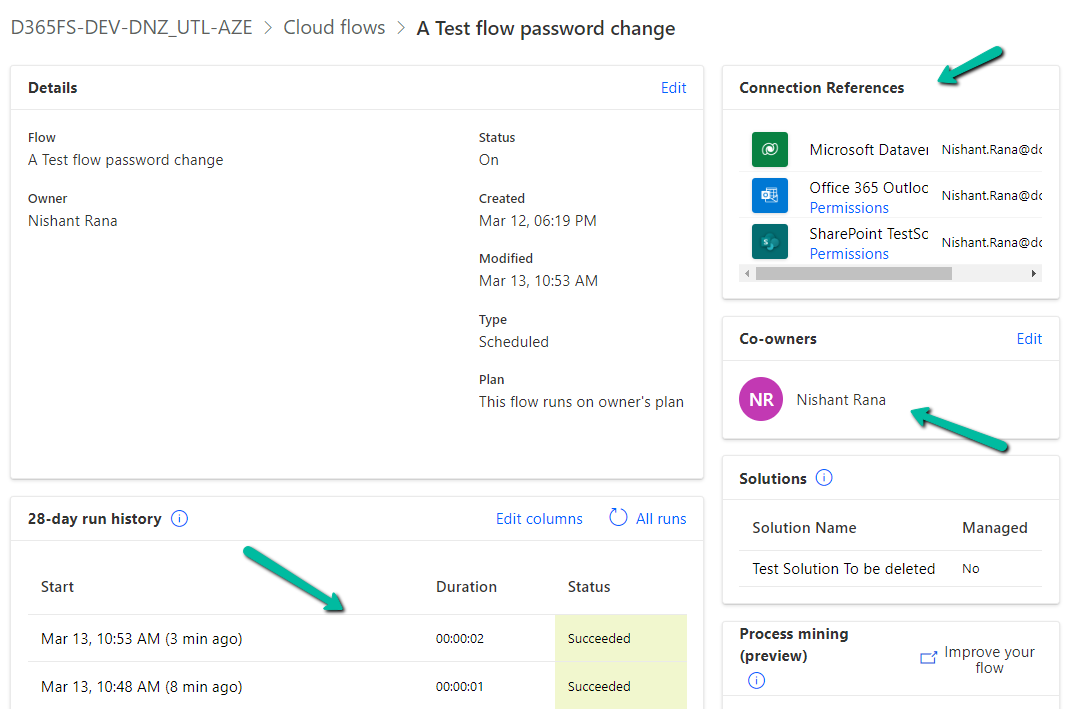

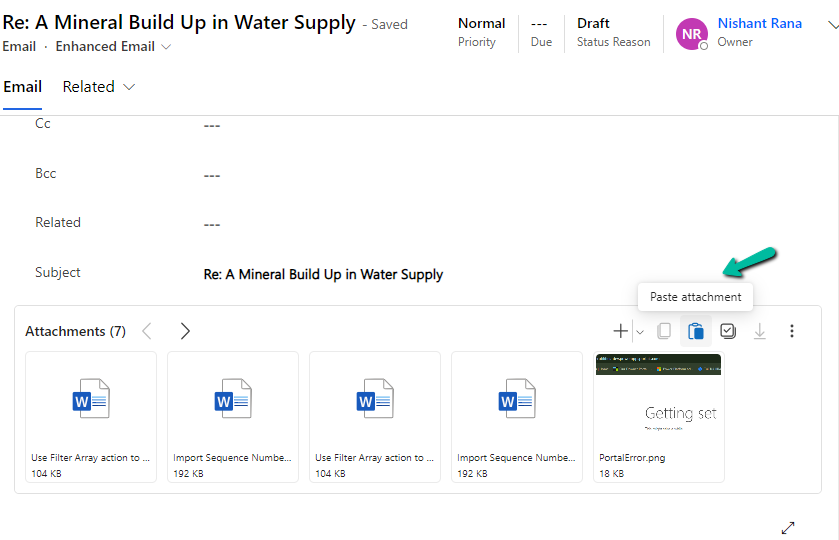

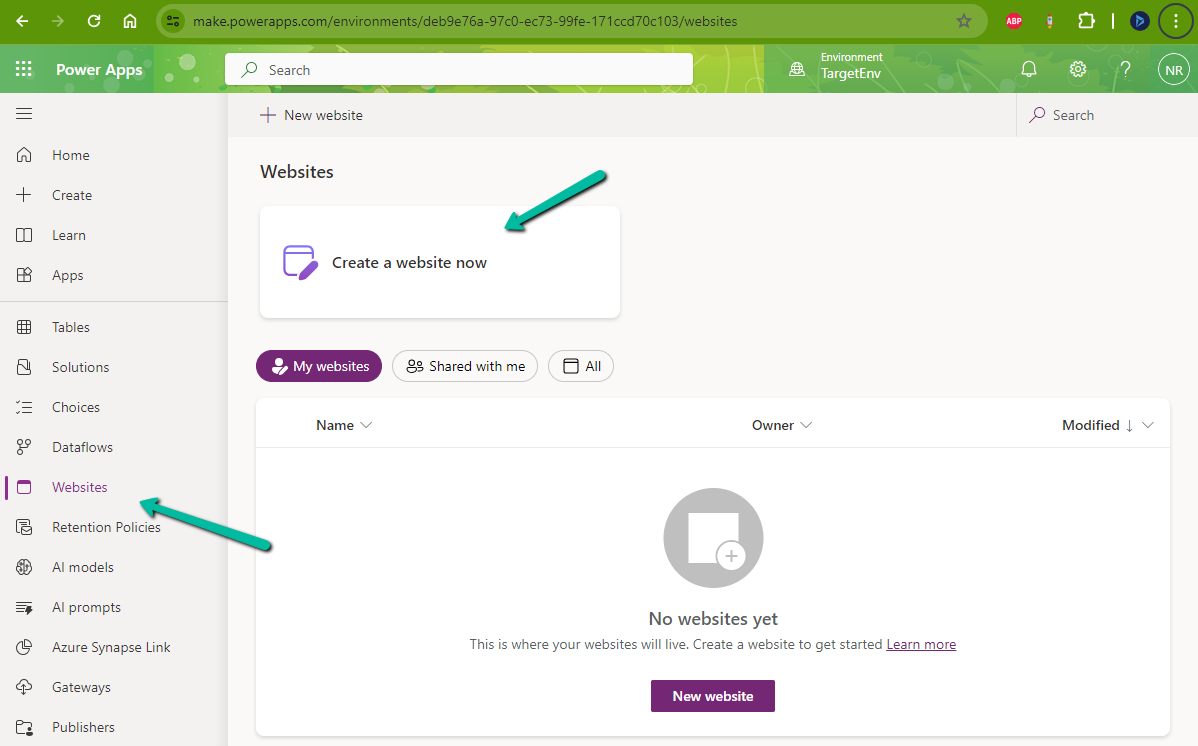

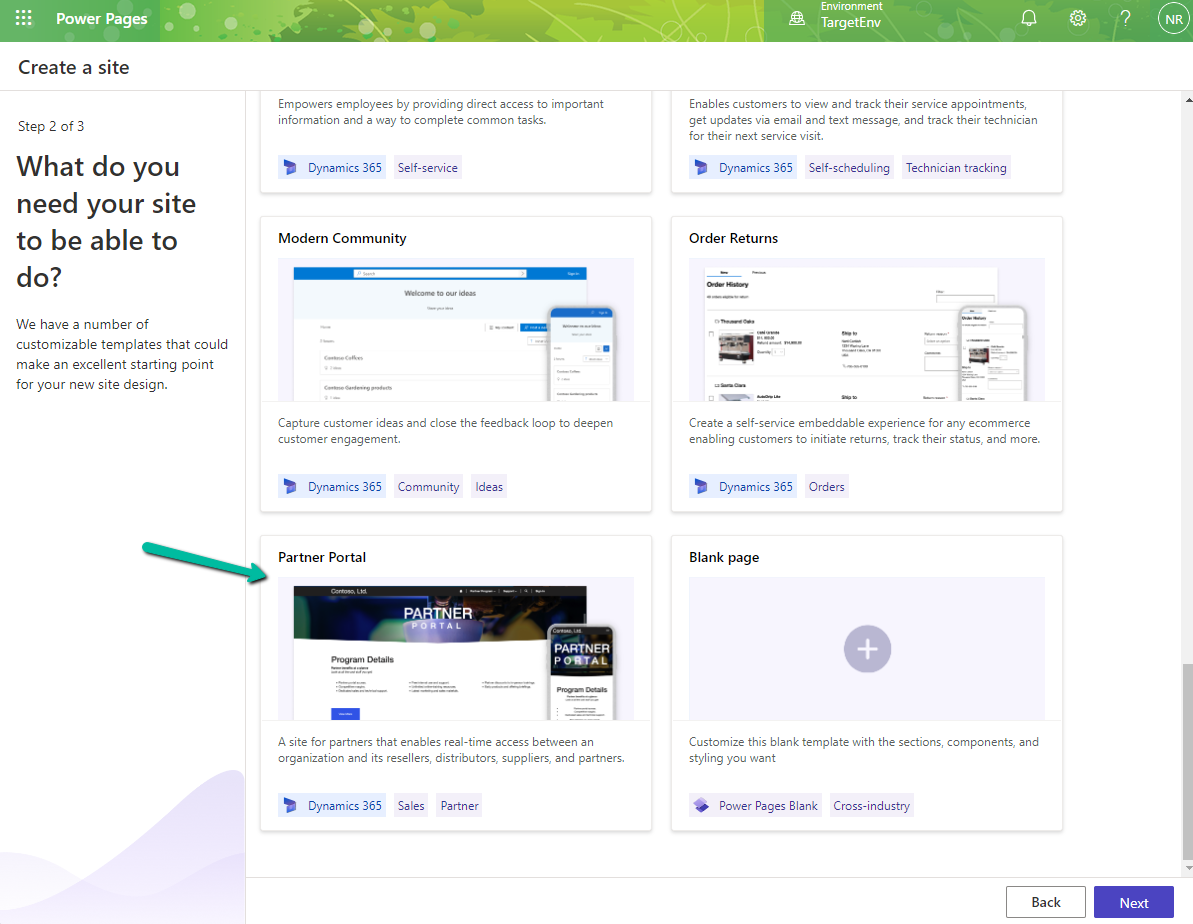

While trying to create a website with the Partner Portal template,

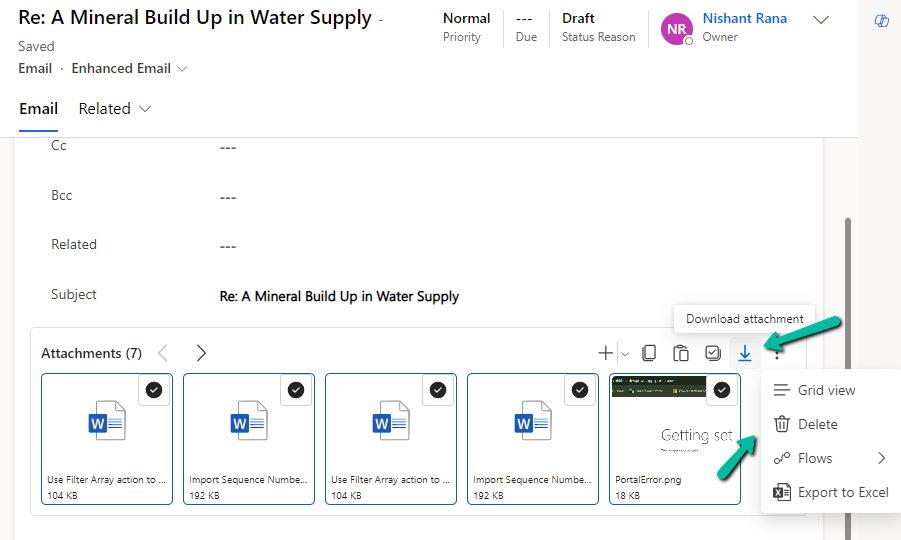

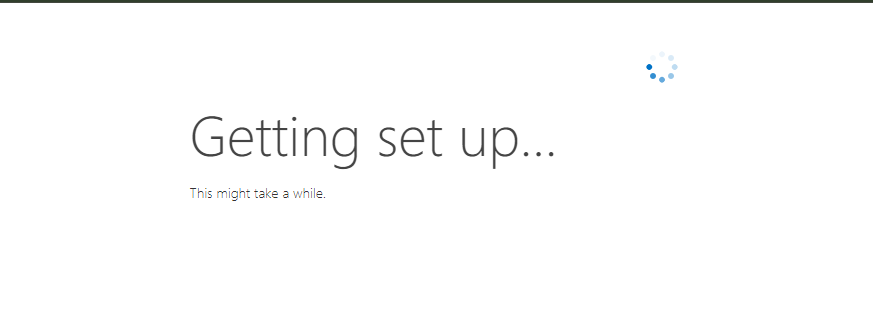

we observed that provisioning was stuck at "Getting set up…".

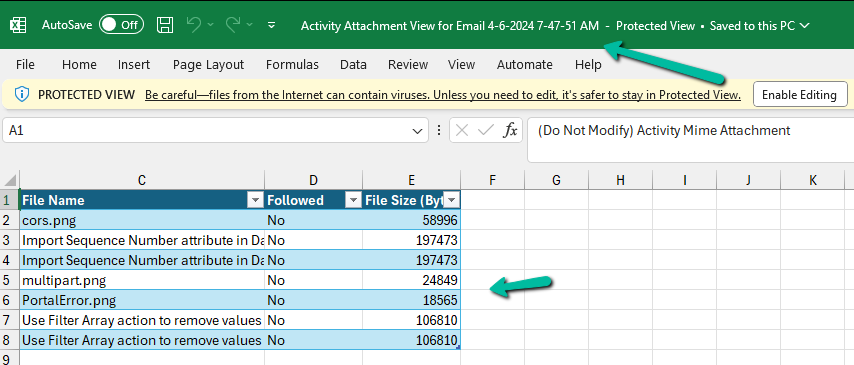

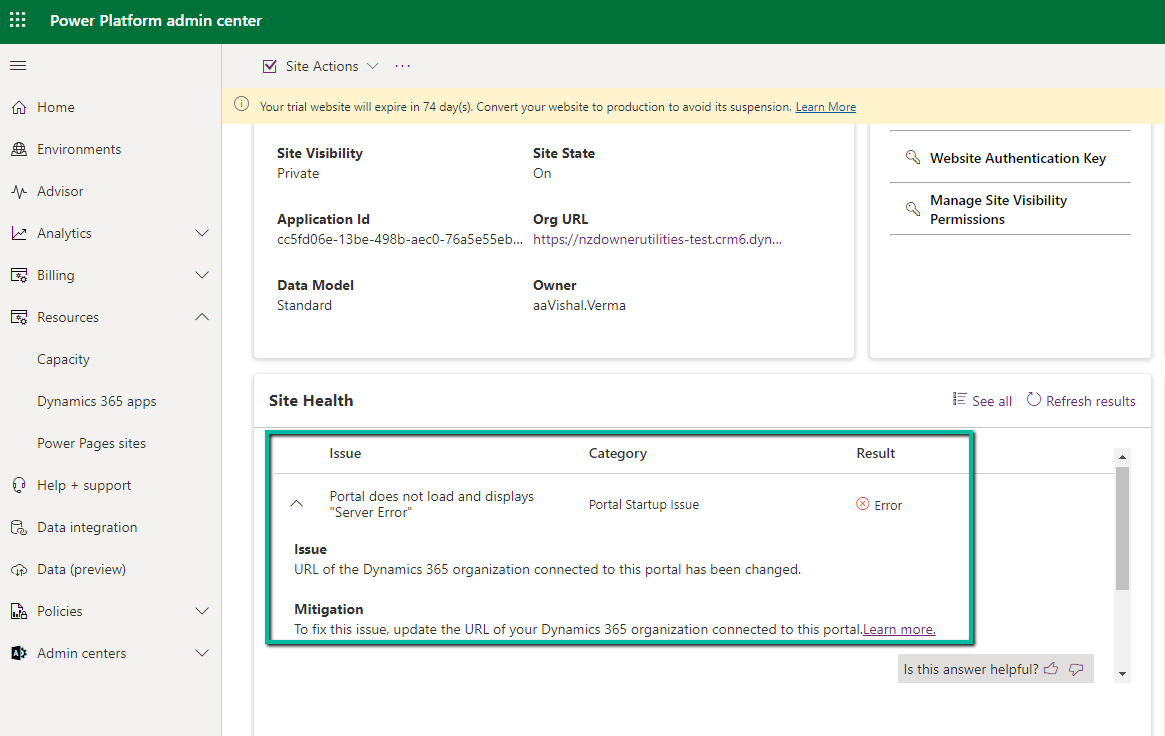

Checking Site Health also showed the following error:

"URL of the Dynamics 365 organization connected to this portal has been changed."

This was not actually the case, because the URL of the organization was correct.

Usually, the first website in an environment takes around an hour to provision. Any subsequent website is provisioned within 15 to 20 minutes, because the common/base solutions are already installed. This was the first website for that environment. However, once it had been stuck for more than 24 hours, we raised a Microsoft Support ticket.

Microsoft acknowledged this as a bug and had a fix ready, but deploying it was delayed because of internal dependencies.

In parallel, they provided us with a workaround, which fixed the issue for us.

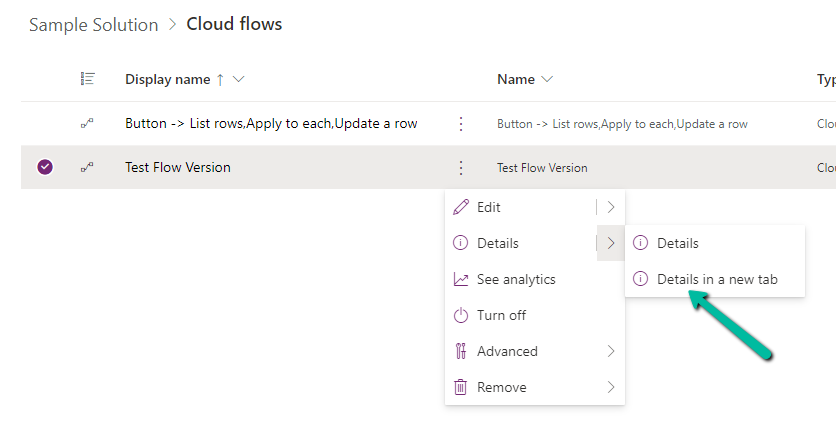

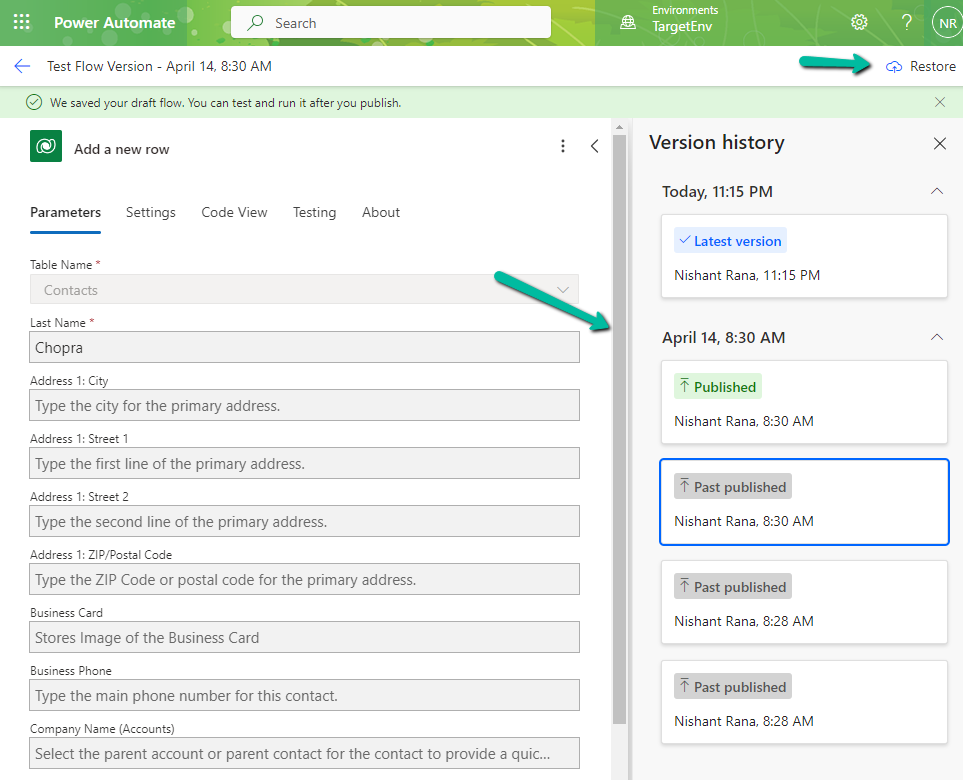

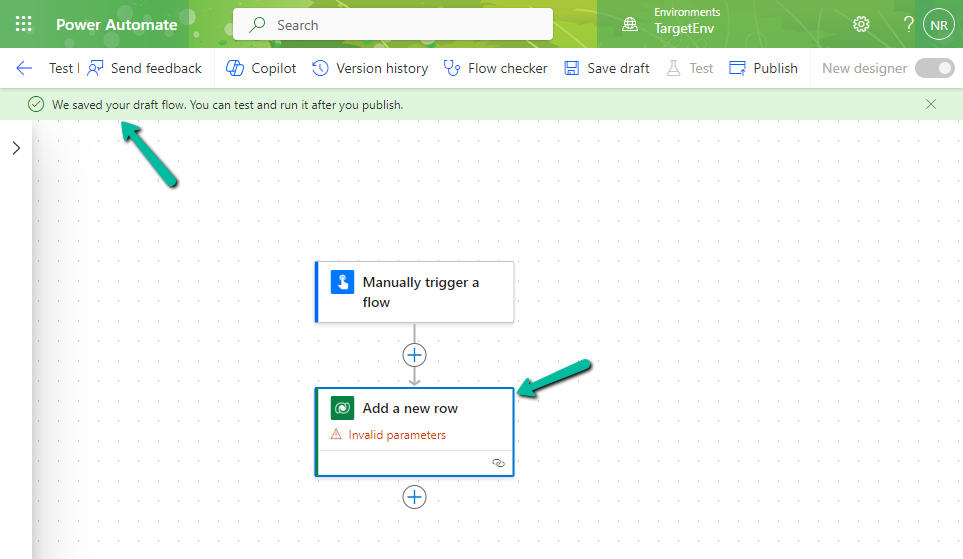

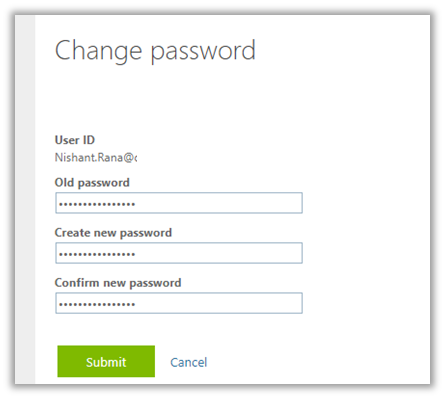

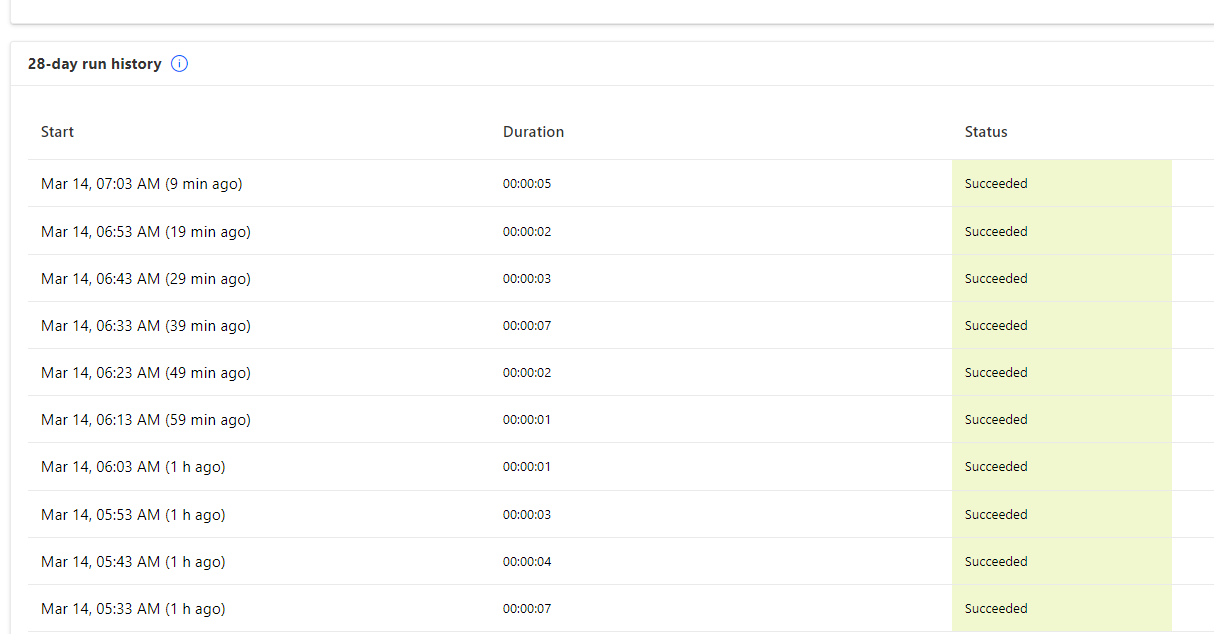

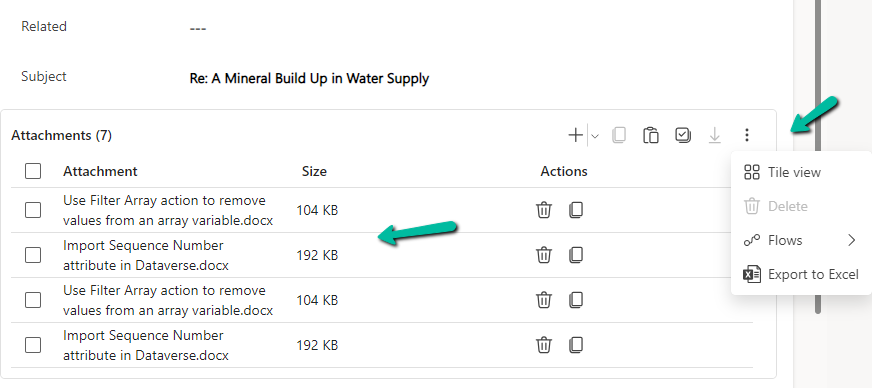

The steps are as follows:

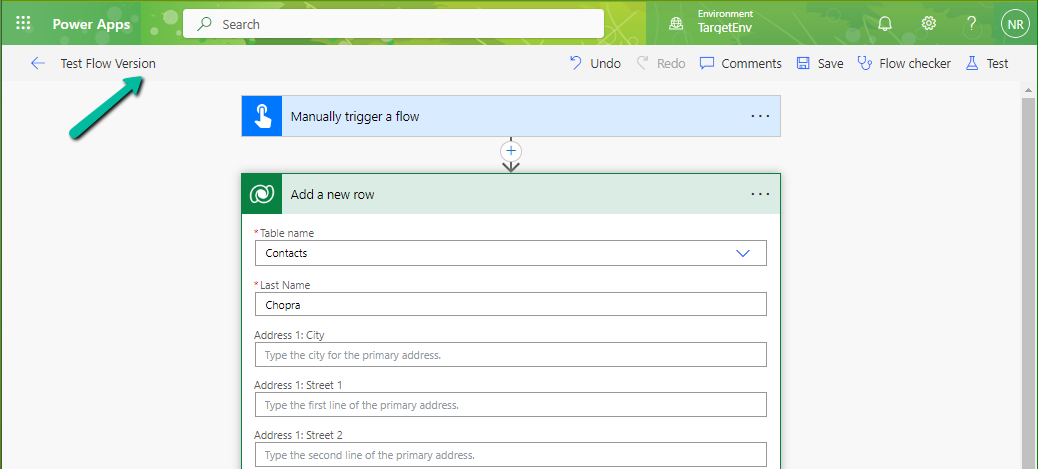

- First, create a website with the Customer Self-Service Portal template.

- Then, create a website with the Partner Portal template.

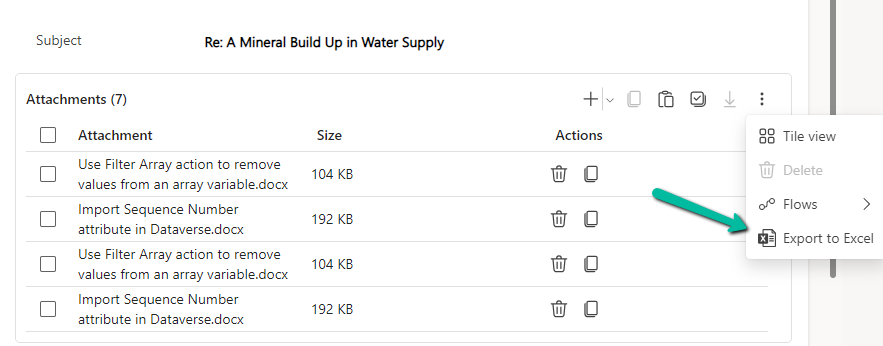

If the Customer Self-Service Portal is not needed, you can then delete that website. After that, delete the following managed solutions, which are specific to Customer Self-Service:

- CustomerPortal (Dynamics 365 Portals – Customer Self-Service Portal)

- MicrosoftPortalAutomate (Dynamics 365 Portals – Automate)

- MicrosoftPortalEnhancedDMMigration (Dynamics 365 Portals – Enhanced DM Migration)

- PortalSitewide_RPServiceApp (PortalSitewideRPServiceApp)

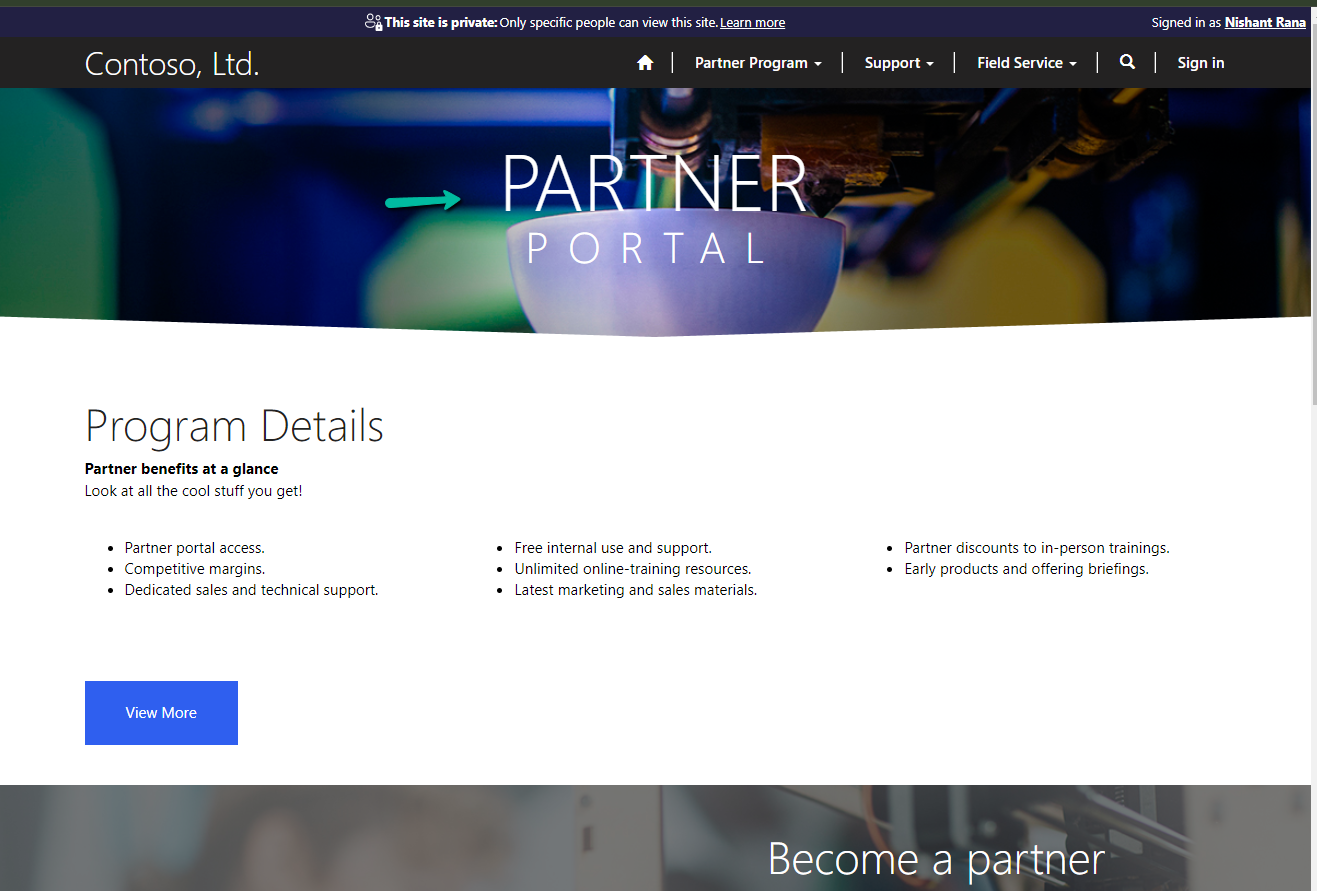

We followed the above steps, and the website with the Partner Portal template was created successfully.

Hope it helps.