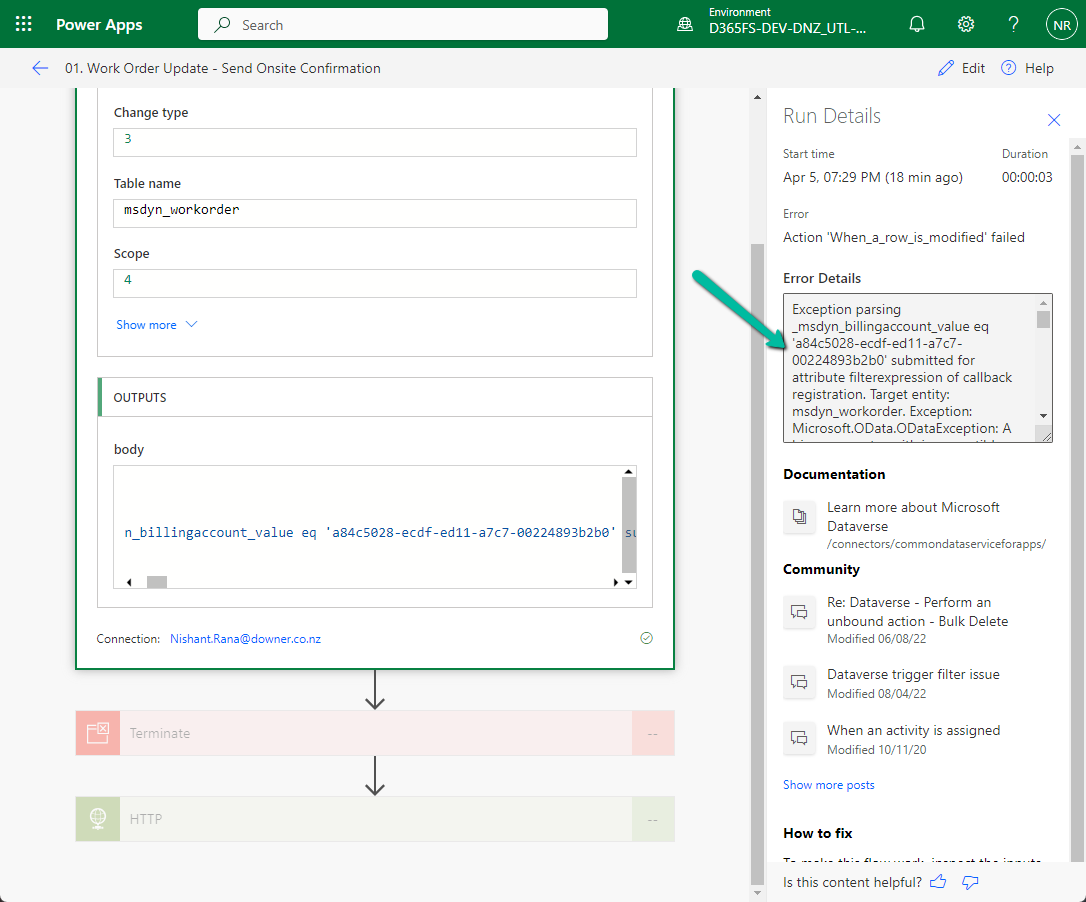

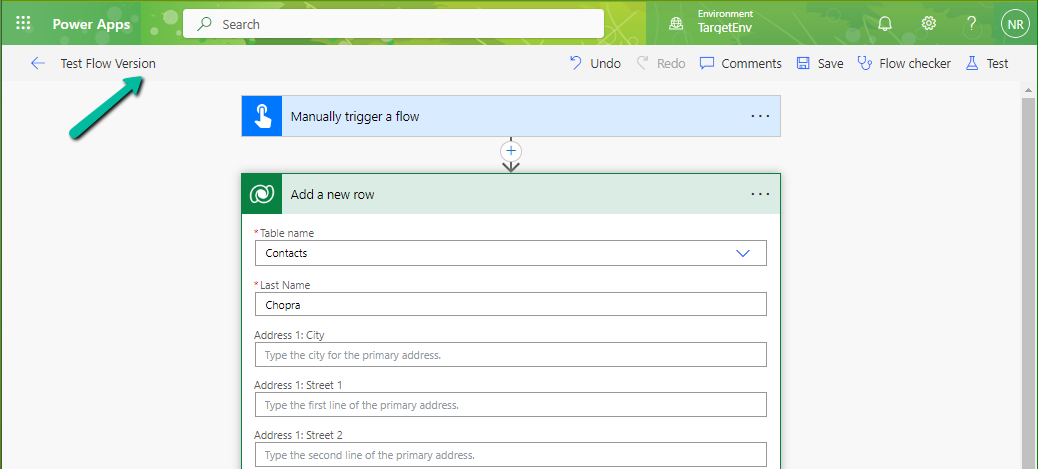

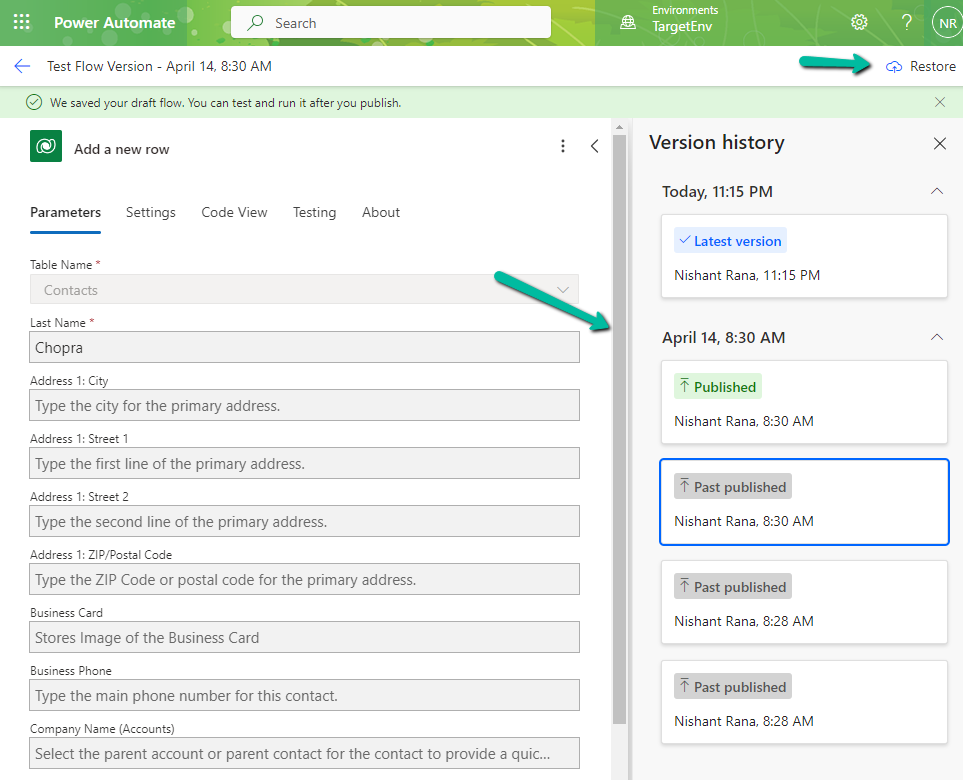

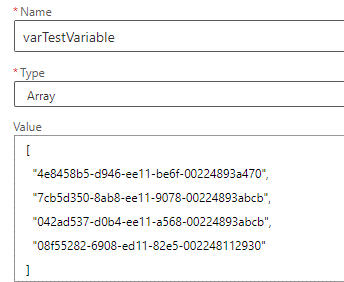

Suppose we have the array variable below, varTestVariable, which holds a list of GUIDs.

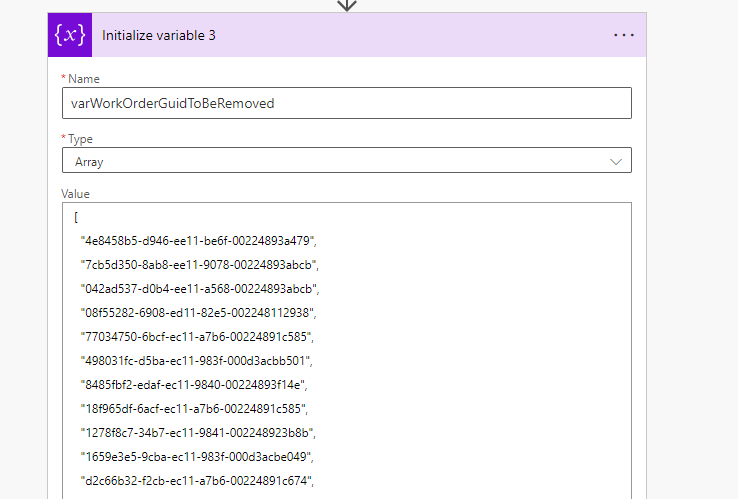

We also have another array variable, varWorkOrderGuidToBeRemoved, which holds the list of GUIDs we want to remove from our first variable, varTestVariable.

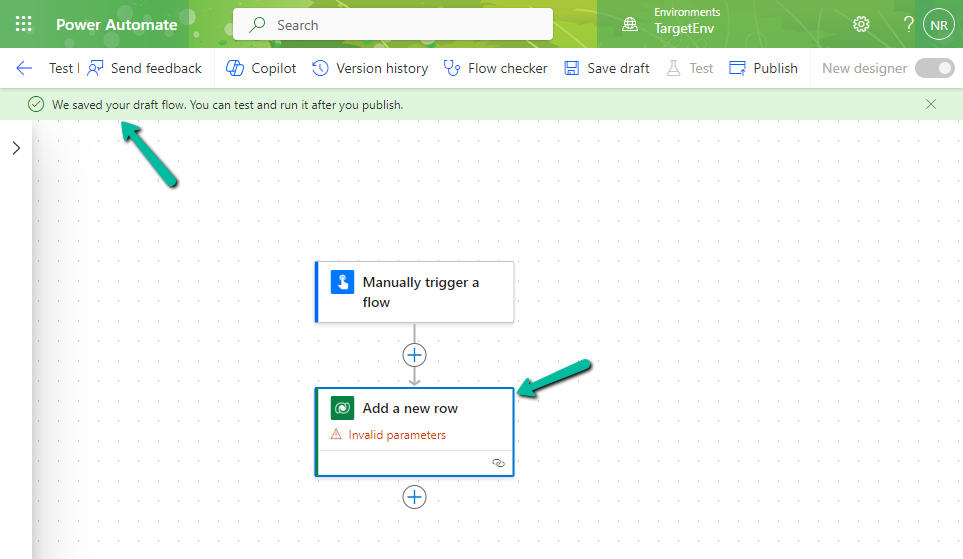

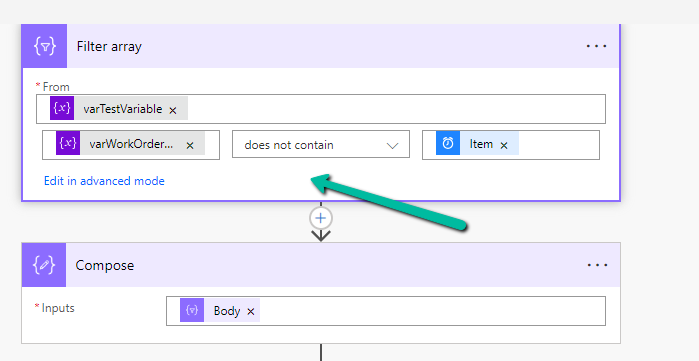

To do this, we can use the Filter array action.

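In From, we specify the array variable from which we want to remove values (varTestVariable). In the condition, we first specify the variable that holds the values to be removed (varWorkOrderGuidToBeRemoved), then select the “does not contain” operator, and finally specify item(), which refers to the current element of the From array. Only the elements for which the condition is true are kept.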
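To make the logic of this condition concrete, here is a minimal Python sketch of what the Filter array step computes: it walks the From array and keeps each item() that the removal list does not contain. This is an illustration, not Power Automate's implementation; the function name `filter_array_does_not_contain` and the GUID values are hypothetical placeholders, not the ones from the screenshots.

```python
# Illustrative sketch of Filter array with a "does not contain" condition.
# The GUIDs below are hypothetical placeholders.

var_test_variable = [
    "11111111-1111-1111-1111-111111111111",
    "22222222-2222-2222-2222-222222222222",
    "33333333-3333-3333-3333-333333333333",
    "44444444-4444-4444-4444-444444444444",
]

var_work_order_guid_to_be_removed = [
    "22222222-2222-2222-2222-222222222222",
    "44444444-4444-4444-4444-444444444444",
]


def filter_array_does_not_contain(from_array, values_to_remove):
    """Keep each item of from_array that values_to_remove does not contain."""
    # A set makes each membership check fast; the result is the same as
    # checking the list directly.
    remove_set = set(values_to_remove)
    # Walk the From array in order, so the original order (and any
    # duplicates that are not being removed) is preserved.
    return [item for item in from_array if item not in remove_set]


body = filter_array_does_not_contain(var_test_variable, var_work_order_guid_to_be_removed)
print(body)
```

Filtering item by item, rather than taking a plain set difference, keeps the surviving GUIDs in their original order. Note that this sketch uses exact Python string equality for the comparison.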

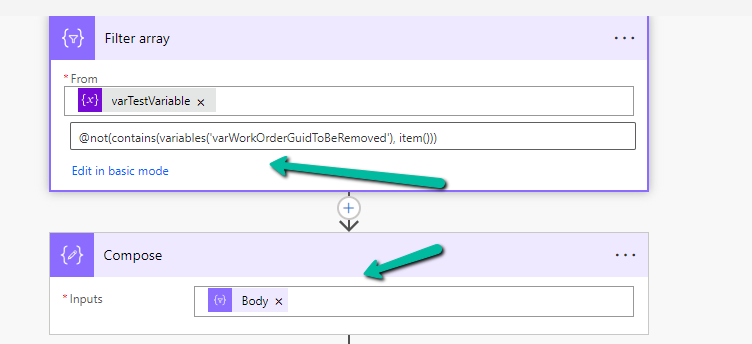

We can also select Edit in advanced mode to view or edit the underlying expression.

We can then use the Body output of the Filter array action, which holds the filtered result, in subsequent actions.

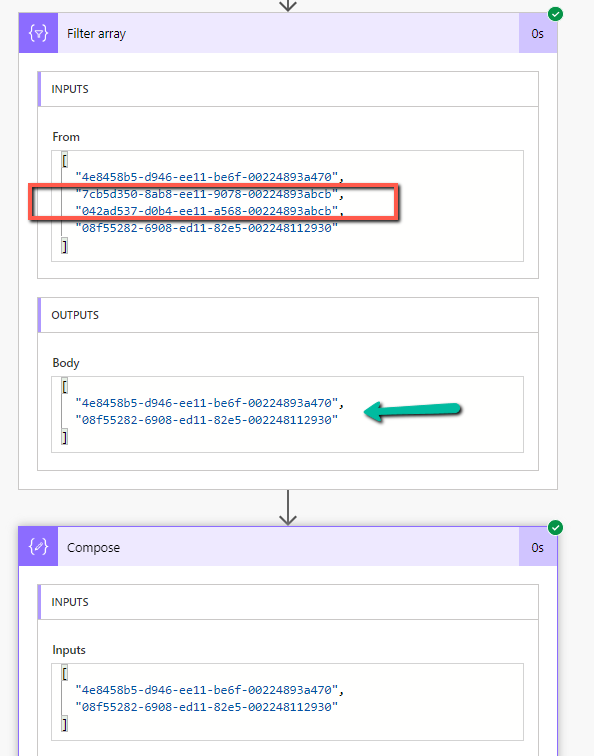

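On running the flow, we can see that the values present in varWorkOrderGuidToBeRemoved have been removed: the Filter array output contains only the GUIDs from varTestVariable that are not in the second array. Note that the action does not modify varTestVariable itself; the result is available in its Body output.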
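When checking a run's inputs and outputs, it can help to confirm the result programmatically. The following is a small hypothetical Python sketch, assuming you have copied the before/after arrays out of the run history; the function names `removed_values` and `is_expected_result` and all GUIDs are placeholders for illustration. It also shows that a GUID in the removal list that does not exist in the first array simply has no effect.

```python
# Hypothetical before/after values, standing in for what a flow run might show.
original = [
    "11111111-1111-1111-1111-111111111111",
    "22222222-2222-2222-2222-222222222222",
    "33333333-3333-3333-3333-333333333333",
    "44444444-4444-4444-4444-444444444444",
]
to_remove = [
    "22222222-2222-2222-2222-222222222222",
    "44444444-4444-4444-4444-444444444444",
    "99999999-9999-9999-9999-999999999999",  # not in original; ignored by the filter
]
result = [
    "11111111-1111-1111-1111-111111111111",
    "33333333-3333-3333-3333-333333333333",
]


def removed_values(original, result):
    """Values of original that no longer appear in result (order preserved)."""
    result_set = set(result)
    return [item for item in original if item not in result_set]


def is_expected_result(original, to_remove, result):
    """True if result is original minus to_remove, in the original order."""
    remove_set = set(to_remove)
    # No GUID from the removal list may remain in the result...
    no_leftovers = all(item not in remove_set for item in result)
    # ...and every other GUID must still be present, in the same order.
    kept_in_order = result == [item for item in original if item not in remove_set]
    return no_leftovers and kept_in_order


print(removed_values(original, result))
print(is_expected_result(original, to_remove, result))
```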

For more details, see https://www.damobird365.com/efficien-union-except-and-intersect-great-method/

Hope it helps.