In the previous post, we saw how to deploy and run SSIS packages in the cloud.

Here we take it one step further and deploy and run SSIS packages that use KingswaySoft's SSIS Integration Toolkit components.

We will need an Azure subscription to host the SSISDB and to provision an Azure-SSIS Integration Runtime instance.

We will also need an Azure Blob Storage account, along with Azure Storage Explorer, to upload the installation files of the SSIS Integration Toolkit.

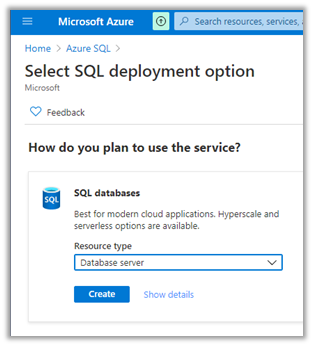

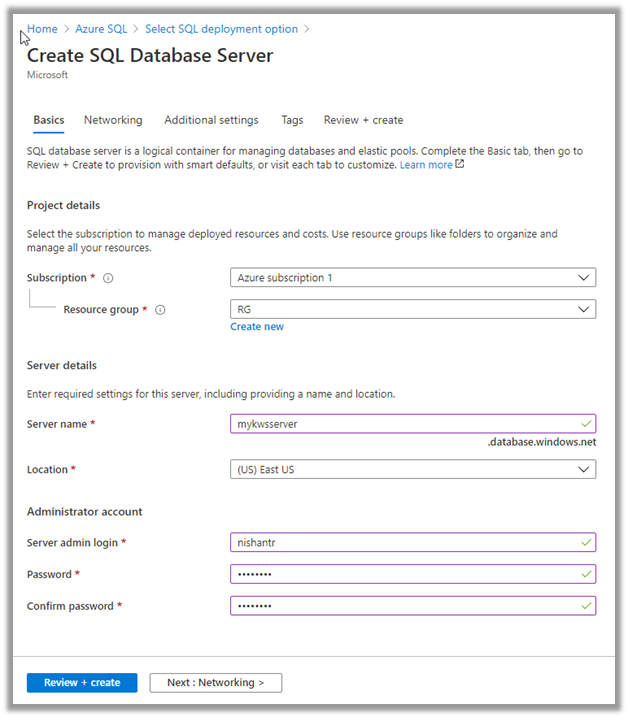

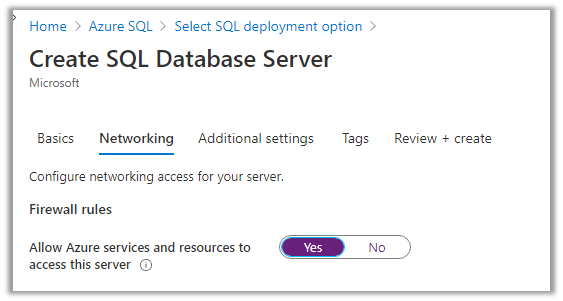

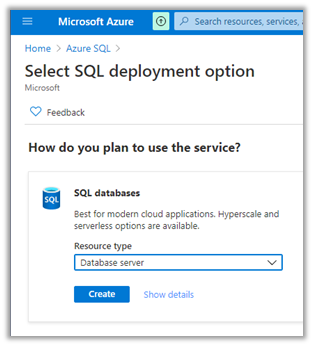

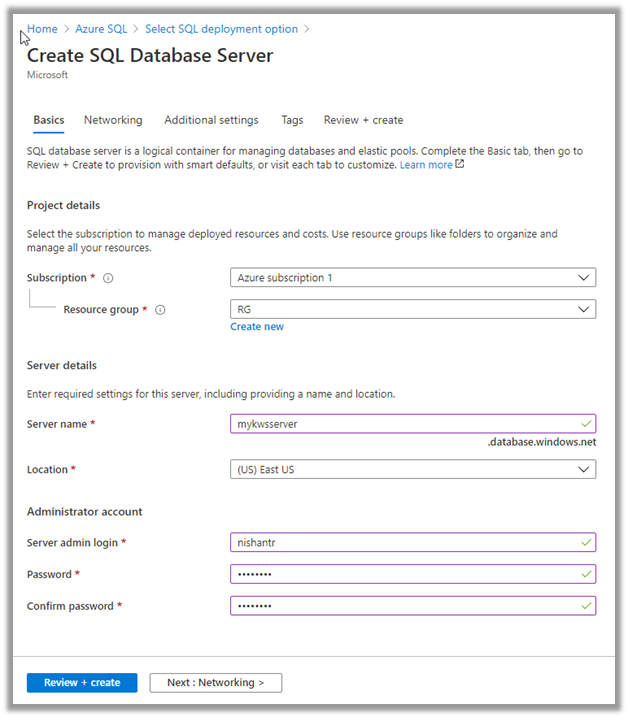

Let us start by creating an Azure SQL Server instance.

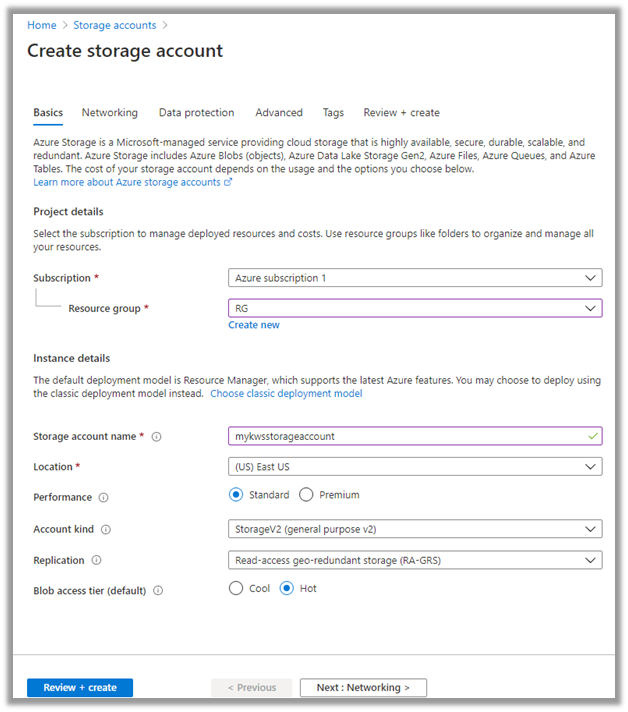

We specified the following details.

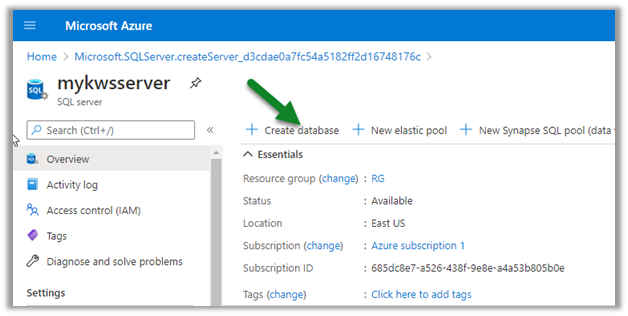

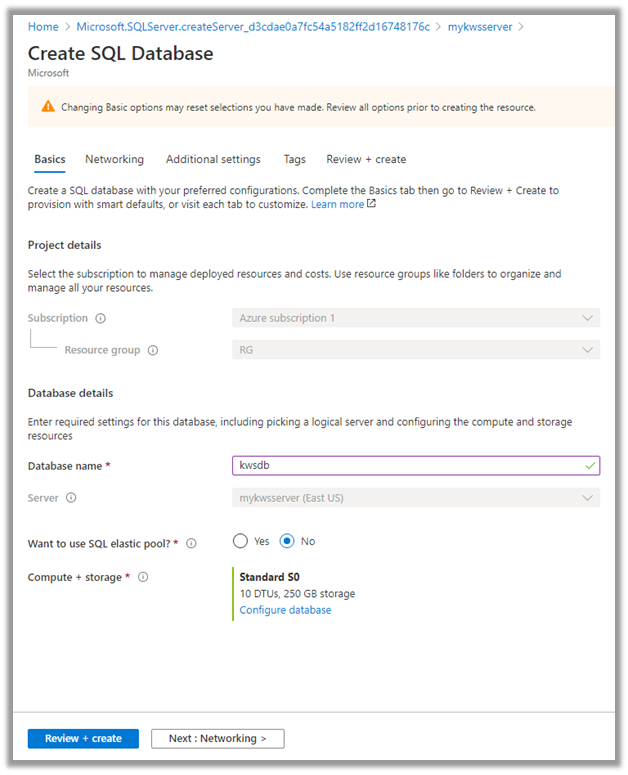

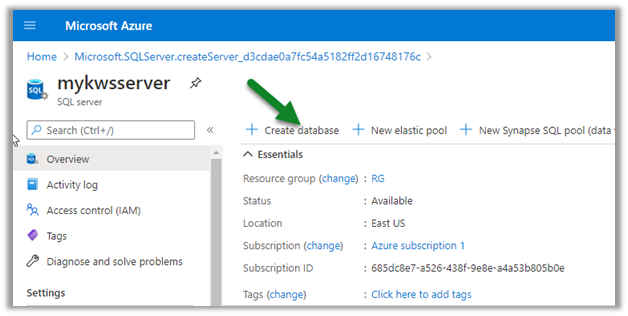

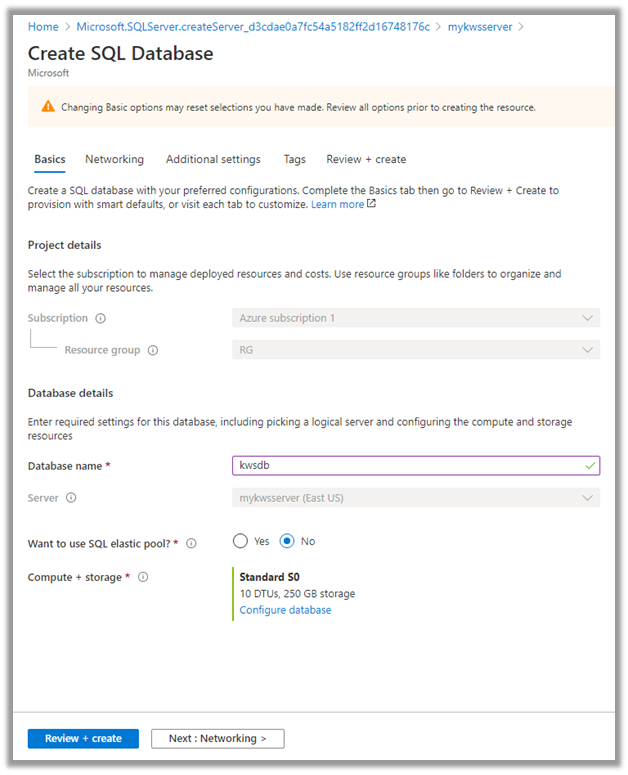

Next, create a database inside the server.

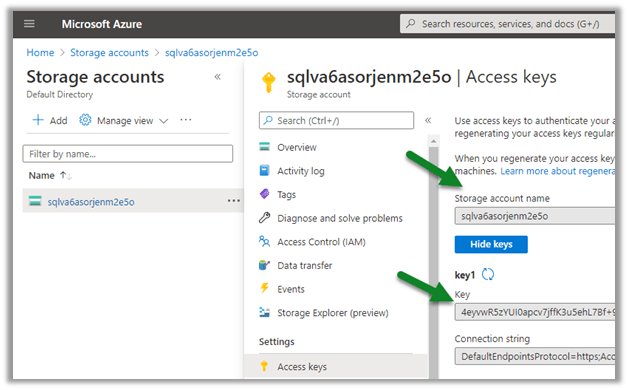

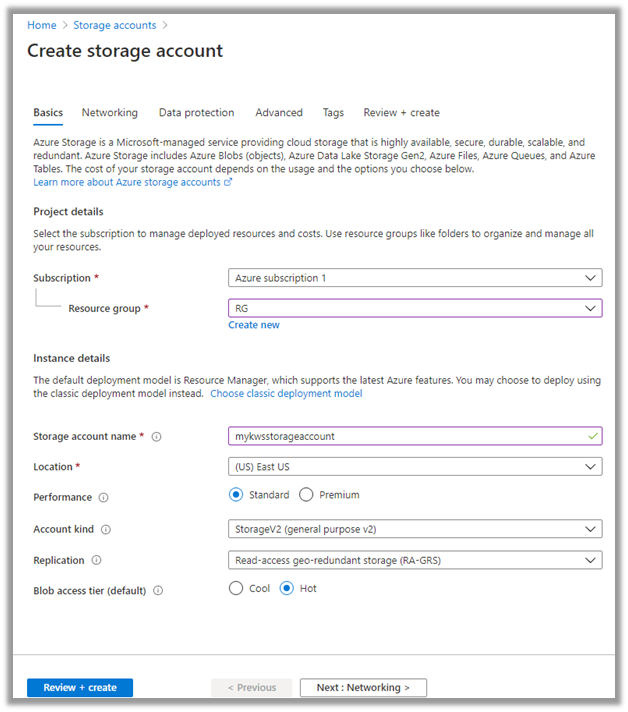

With the Azure SQL server and database created, the next step is to create the storage account.

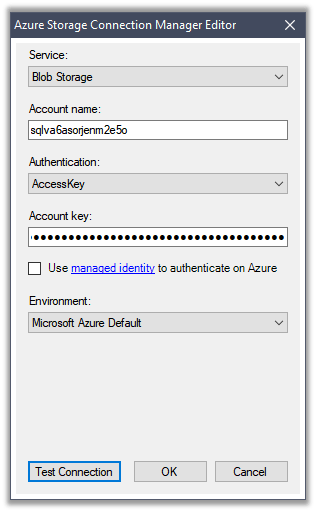

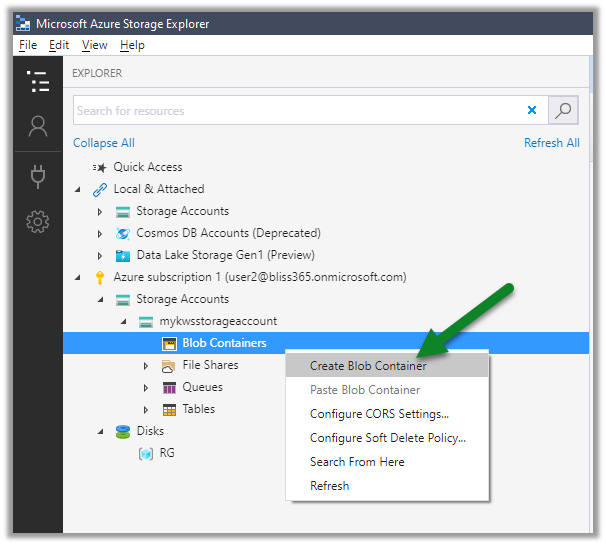

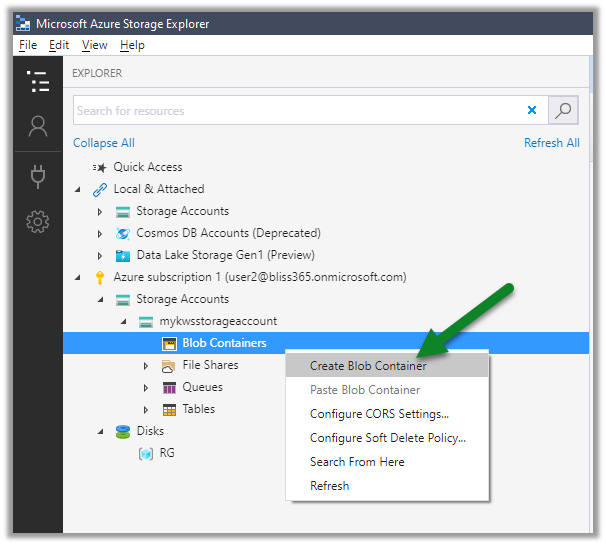
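Storage account names must be 3 to 24 characters long and may contain only lowercase letters and digits. They must also be globally unique, and only Azure can check that. Here is a minimal sketch of a local pre-check you could run before filling in the form. The candidate names are hypothetical.

```python
import re

# Local naming rules for an Azure storage account: 3-24 chars, lowercase letters and digits only.
# Global uniqueness is enforced by Azure and cannot be checked here.
_STORAGE_NAME = re.compile(r"[a-z0-9]{3,24}")


def is_valid_storage_account_name(name: str) -> bool:
    """Return True if `name` passes the local naming rules."""
    return _STORAGE_NAME.fullmatch(name) is not None


# Hypothetical candidates: only the first one is acceptable.
for candidate in ["ssisinstallfiles", "SSIS-Files", "ab"]:
    print(candidate, is_valid_storage_account_name(candidate))
```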

With the storage account created, let us connect to Azure using Azure Storage Explorer.

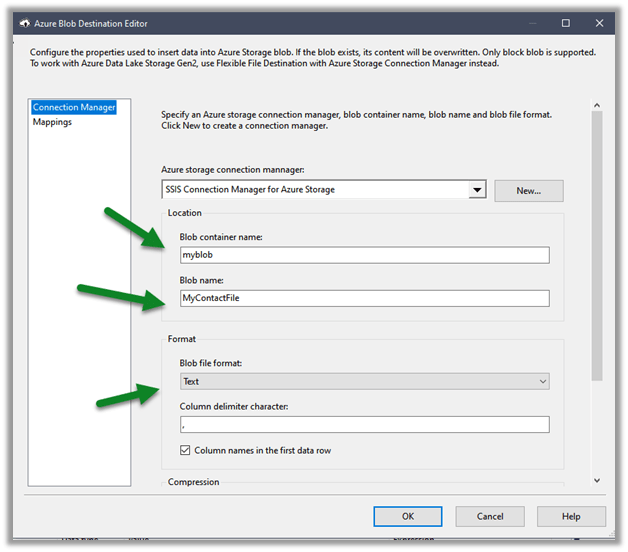

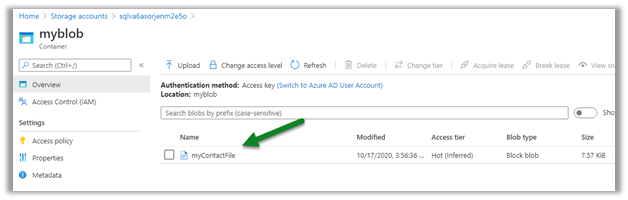

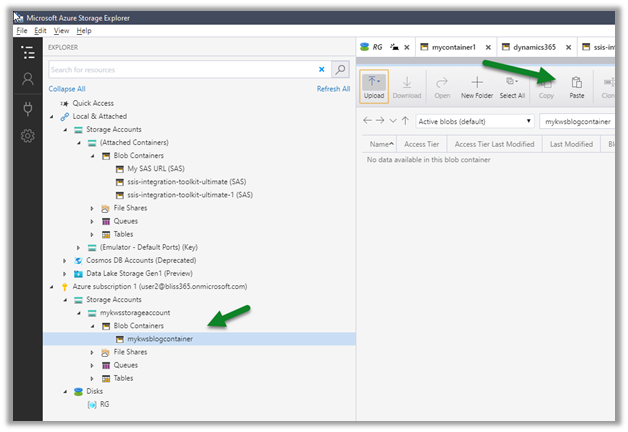

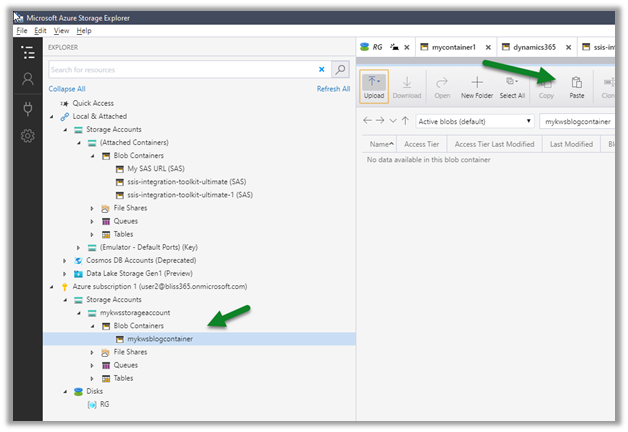

Create a new blob container in the storage account.

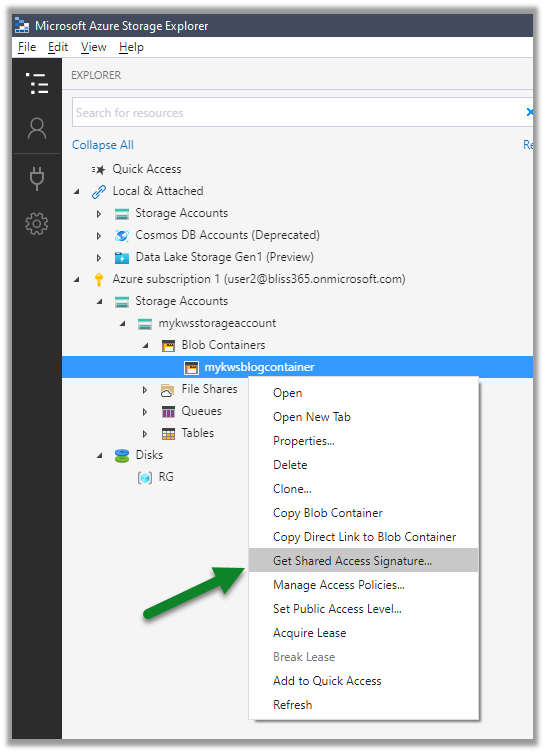

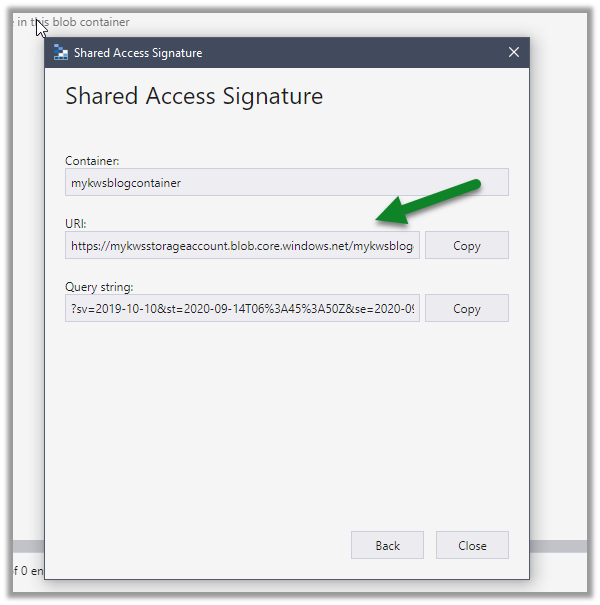

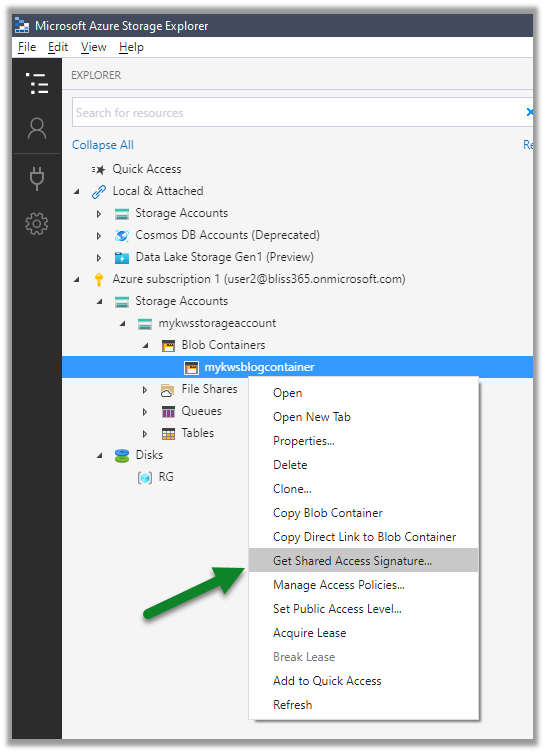

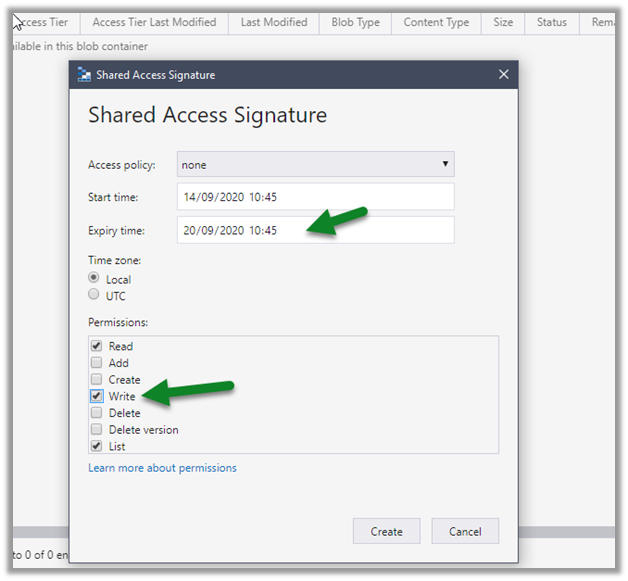

Right-click the new blob container and select Get Shared Access Signature.

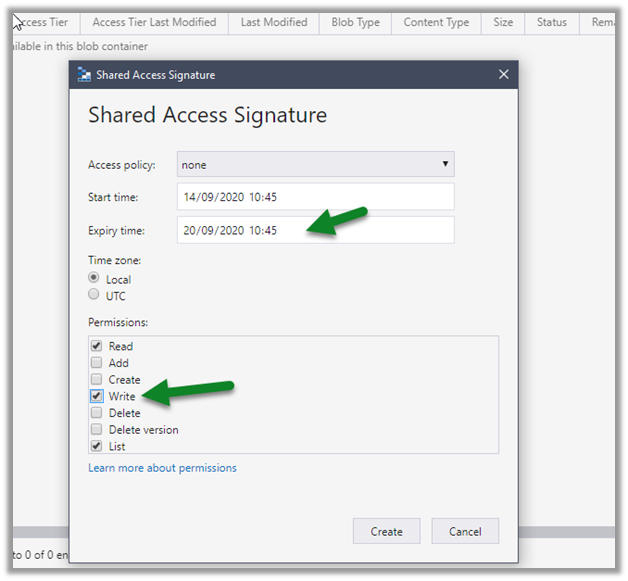

Specify the expiry time and grant Write permission. Write access is needed for logging while the Azure-SSIS IR is being provisioned.

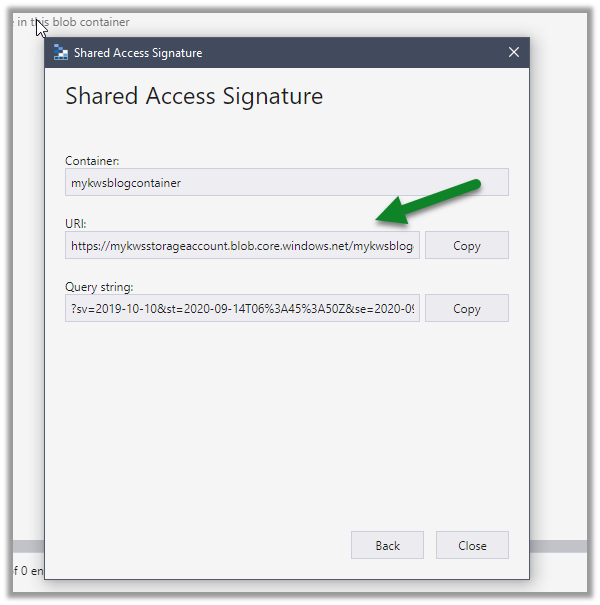

Copy the URL; it will be used in the PowerShell script later.

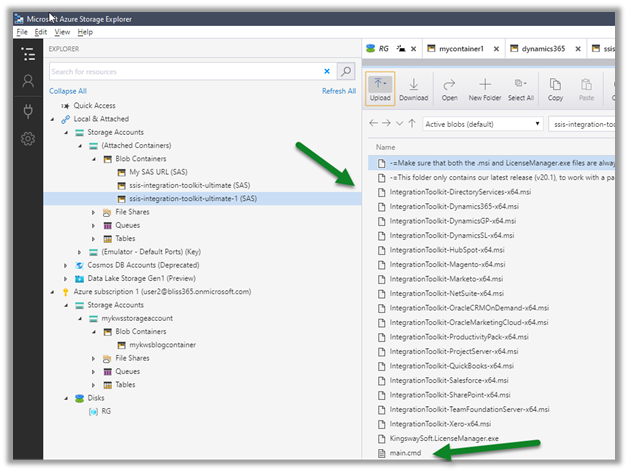

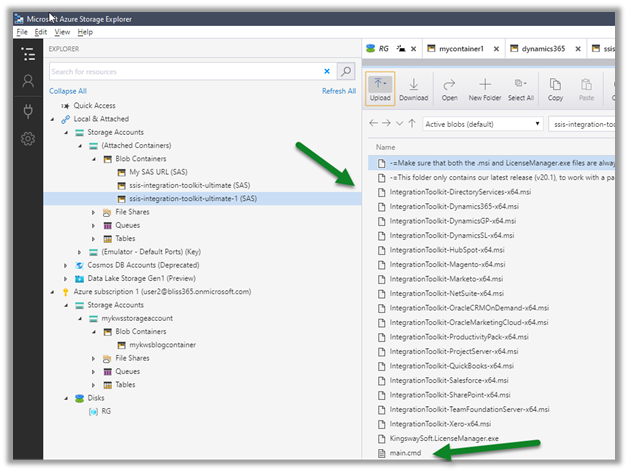
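A SAS that lacks Write permission or has already expired is easy to miss until provisioning fails. The following minimal sketch reads the standard `sp` (permissions) and `se` (expiry) query parameters from the copied URL and checks both. The account name, container name, and signature in the sample URL are placeholders.

```python
from datetime import datetime, timezone
from urllib.parse import urlsplit, parse_qs


def sas_params(url: str) -> dict:
    """Return the SAS query parameters of `url` as a flat dict (first value wins)."""
    return {key: values[0] for key, values in parse_qs(urlsplit(url).query).items()}


def parse_sas_time(value: str) -> datetime:
    """Parse a SAS time such as 2030-01-01T00:00:00Z (or a bare date) as UTC."""
    parsed = datetime.fromisoformat(value.replace("Z", "+00:00"))
    return parsed if parsed.tzinfo else parsed.replace(tzinfo=timezone.utc)


def sas_allows_logging(url: str, now: datetime) -> bool:
    """True if the SAS grants write ('w' in sp) and its expiry (se) is later than `now`."""
    params = sas_params(url)
    if "w" not in params.get("sp", "") or "se" not in params:
        return False
    return parse_sas_time(params["se"]) > now


# Placeholder URL: substitute the one copied from Storage Explorer.
url = ("https://mystorageaccount.blob.core.windows.net/ssis-setup"
       "?sv=2018-03-28&sr=c&sp=rwl&se=2030-01-01T00%3A00%3A00Z&sig=PLACEHOLDER")
print(sas_allows_logging(url, datetime.now(timezone.utc)))
```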

Now let us get the installation files and programs from KingswaySoft's shared blob container and place them in the blob container we just created.

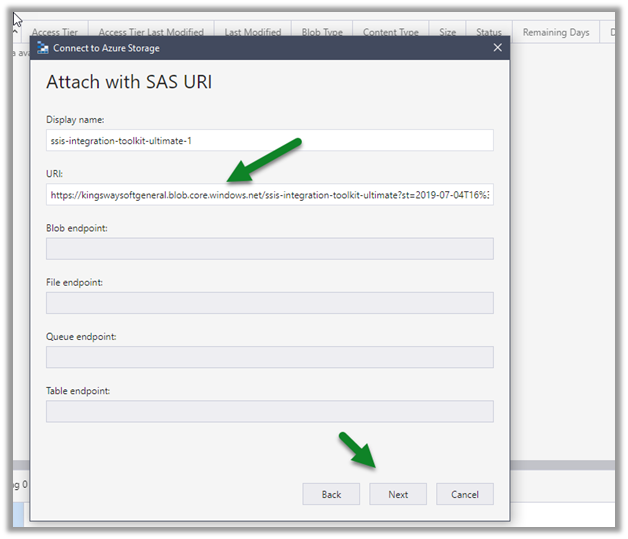

https://kingswaysoftgeneral.blob.core.windows.net/ssis-integration-toolkit-ultimate?st=2019-07-04T16%3A10%3A25Z&se=2059-07-05T16%3A10%3A00Z&sp=rl&sv=2018-03-28&sr=c&sig=LAGvouFpkZHEk%2BH8%2B0pK%2FDNg7B3jPUf%2FJ91%2BJ%2FEeKg0%3D
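The URL above is a container SAS URL, and its query string says what it grants. The sketch below decodes the standard SAS fields using only the Python standard library. `st` and `se` are the start and expiry times, `sp=rl` grants read and list, and `sr=c` scopes the token to the container. That is why the files can be browsed and copied but not modified.

```python
from urllib.parse import urlsplit, parse_qs

# The KingswaySoft shared container URL from the step above.
KINGSWAYSOFT_URL = (
    "https://kingswaysoftgeneral.blob.core.windows.net/ssis-integration-toolkit-ultimate"
    "?st=2019-07-04T16%3A10%3A25Z&se=2059-07-05T16%3A10%3A00Z&sp=rl&sv=2018-03-28&sr=c"
    "&sig=LAGvouFpkZHEk%2BH8%2B0pK%2FDNg7B3jPUf%2FJ91%2BJ%2FEeKg0%3D"
)

# Human-readable names for the standard SAS fields that appear in this URL.
FIELDS = {"st": "start", "se": "expiry", "sv": "service version"}
PERMISSIONS = {"r": "read", "a": "add", "c": "create", "w": "write", "d": "delete", "l": "list"}
RESOURCES = {"c": "container", "b": "blob"}


def describe_sas(url: str) -> dict:
    """Print what a SAS URL grants and return its decoded query parameters."""
    parts = urlsplit(url)
    params = {key: values[0] for key, values in parse_qs(parts.query).items()}
    print("path:", parts.path)
    for key, label in FIELDS.items():
        print(f"{label}: {params.get(key)}")
    print("resource:", RESOURCES.get(params.get("sr", ""), "unknown"))
    print("permissions:", ", ".join(PERMISSIONS.get(p, p) for p in params.get("sp", "")))
    return params


describe_sas(KINGSWAYSOFT_URL)
```

Running it reports an expiry of `2059-07-05T16:10:00Z` and the permissions `read, list`.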

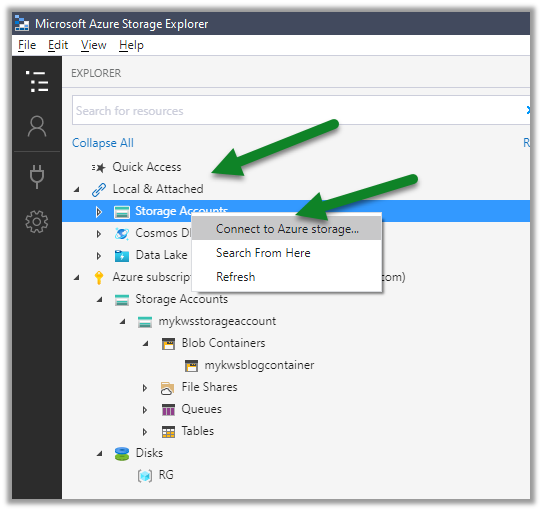

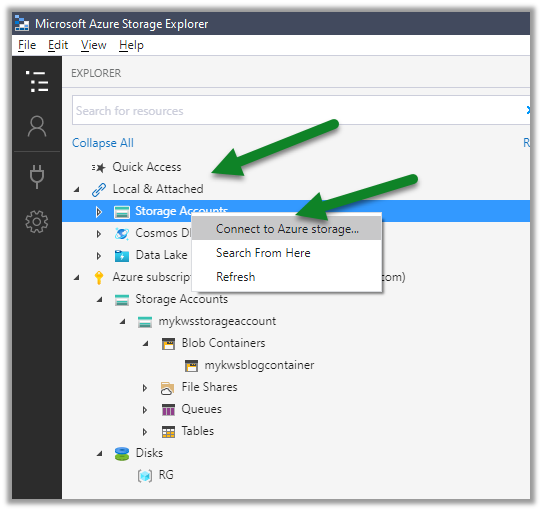

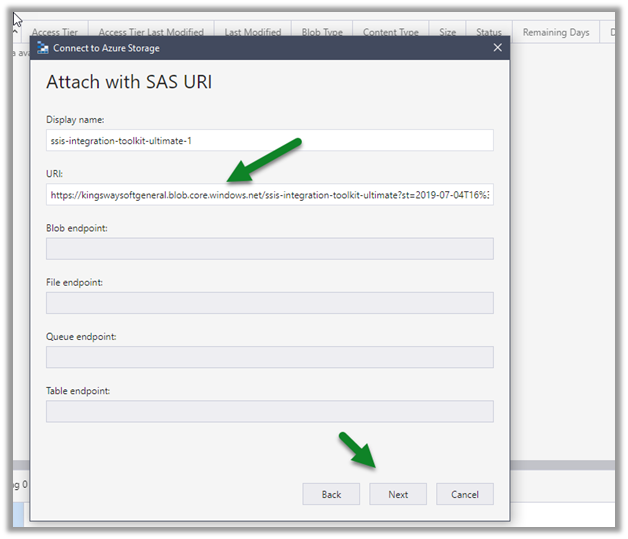

Right-click Storage Accounts and select Connect to Azure Storage.

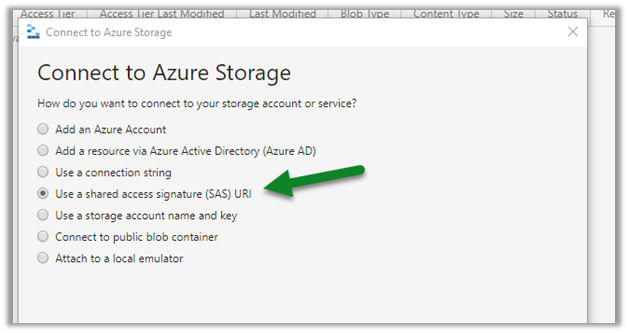

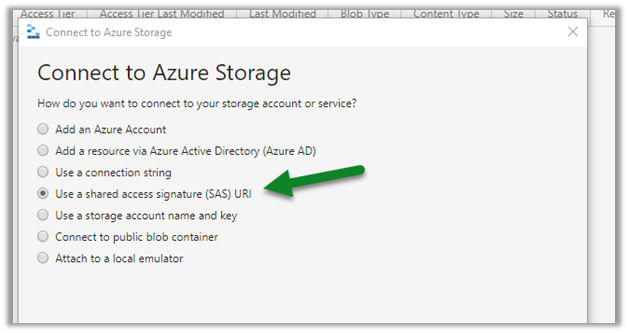

Select Use a shared access signature (SAS) URI.

Paste the KingswaySoft blob container URL.

We can now see the contents of the KingswaySoft blob container.

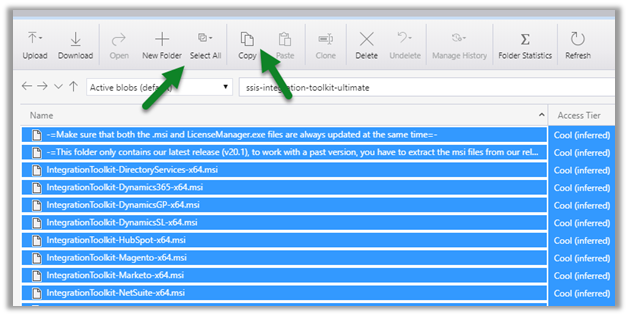

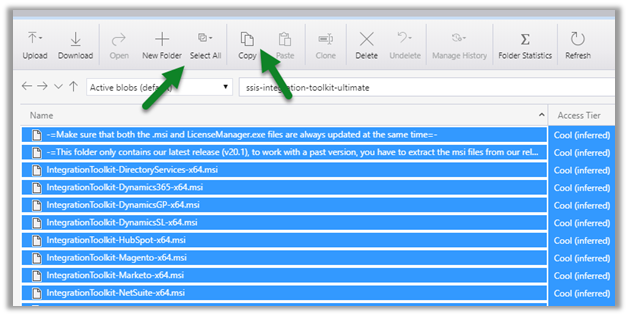

Select and copy all the files, then paste them into the blob container we created earlier.

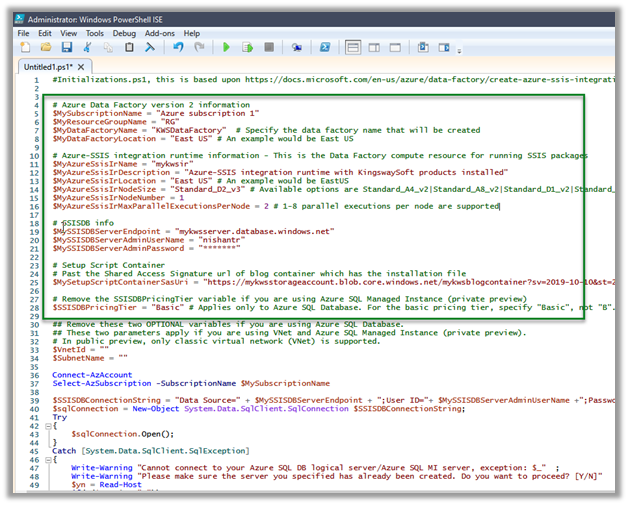

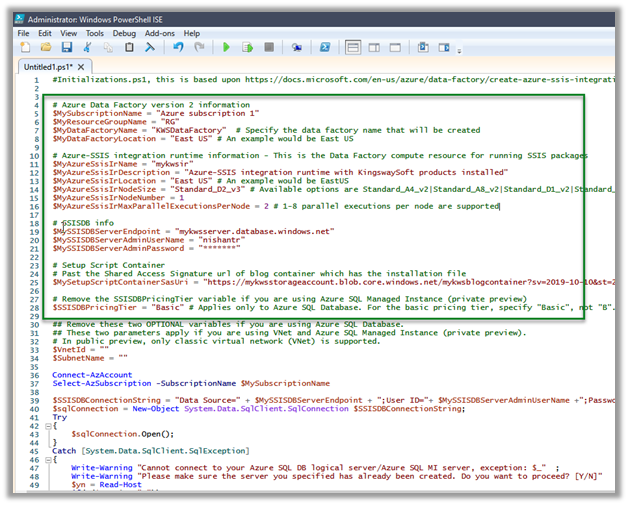
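Storage Explorer performs the copy for us. If you script it instead, each file is addressed by appending its blob name to the container path while keeping the container's SAS query string. A URL like that can then serve as the source of a server-side copy, for example with `BlobClient.start_copy_from_url` in the `azure-storage-blob` package. The sketch below only builds those URLs. The source URL and blob names are hypothetical.

```python
from urllib.parse import quote, urlsplit, urlunsplit


def blob_url(container_sas_url: str, blob_name: str) -> str:
    """Return the URL of `blob_name` inside the container, keeping the container's SAS token."""
    scheme, netloc, path, query, fragment = urlsplit(container_sas_url)
    # quote() leaves '/' intact, so virtual folders such as "setup/file.msi" still work.
    blob_path = path.rstrip("/") + "/" + quote(blob_name)
    return urlunsplit((scheme, netloc, blob_path, query, fragment))


# Hypothetical source container URL and blob names, for illustration only.
source = "https://sourceaccount.blob.core.windows.net/source-container?sp=rl&sig=PLACEHOLDER"
for name in ["main.cmd", "setup files/installer.msi"]:
    print(blob_url(source, name))
```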

With everything set up, let us get the PowerShell script that provisions the Azure-SSIS Integration Runtime (Initializations.ps1) and update it.

Azure PowerShell is required to run the script, so install it if it is not already available. Then specify the appropriate values and run the script.

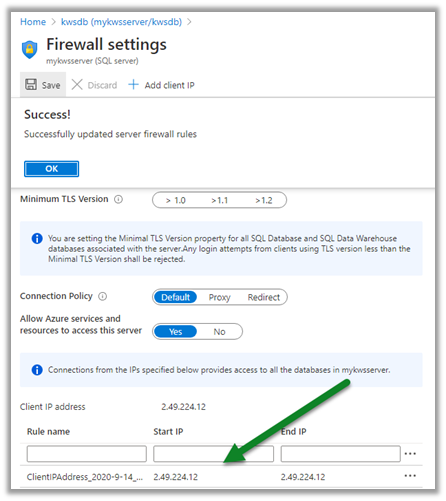

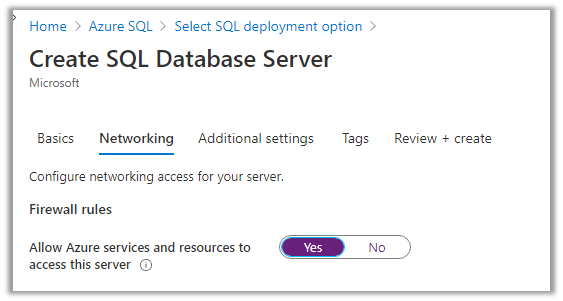

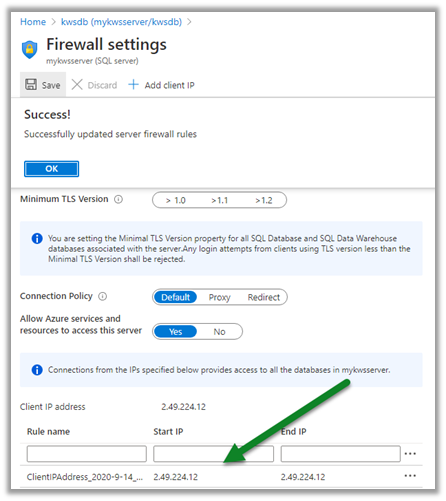

Also, make sure to update the Azure SQL server's firewall rules to allow the client to connect.
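Azure SQL server-level firewall rules are inclusive ranges of allowed IP addresses, each with a start and an end address. A client can connect only if its address falls inside at least one range. Here is a minimal sketch of that check. The rule list and IP addresses are hypothetical and come from documentation-reserved ranges. The special `0.0.0.0` to `0.0.0.0` rule lets Azure services connect rather than admitting arbitrary clients.

```python
import ipaddress
from typing import Iterable, NamedTuple


class FirewallRule(NamedTuple):
    """A server-level rule: an inclusive range of allowed IP addresses."""
    name: str
    start_ip: str
    end_ip: str


def client_allowed(client_ip: str, rules: Iterable[FirewallRule]) -> bool:
    """True if `client_ip` falls inside the start/end range of any rule."""
    ip = ipaddress.ip_address(client_ip)
    for rule in rules:
        start = ipaddress.ip_address(rule.start_ip)
        end = ipaddress.ip_address(rule.end_ip)
        if start.version == ip.version and start <= ip <= end:
            return True
    return False


rules = [
    # Hypothetical rule for the machine running the script.
    FirewallRule("ClientIP", "203.0.113.10", "203.0.113.10"),
    # Azure's 0.0.0.0-0.0.0.0 rule lets Azure services in; it does not admit arbitrary clients.
    FirewallRule("AllowAllWindowsAzureIps", "0.0.0.0", "0.0.0.0"),
]
print(client_allowed("203.0.113.10", rules))  # True
print(client_allowed("198.51.100.7", rules))  # False
```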

Update the PowerShell script with the appropriate values.

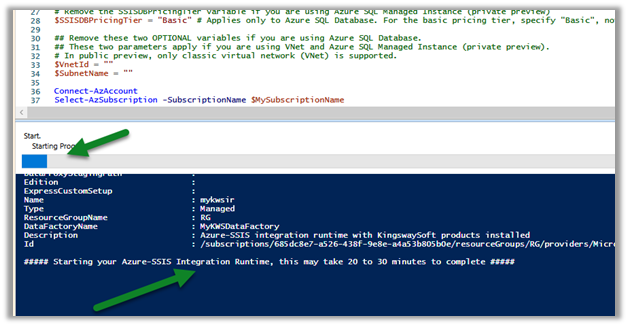

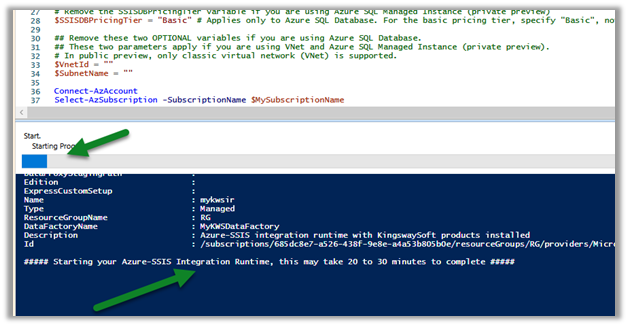

We can check the status as shown below.

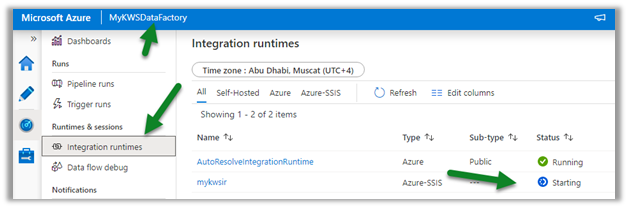

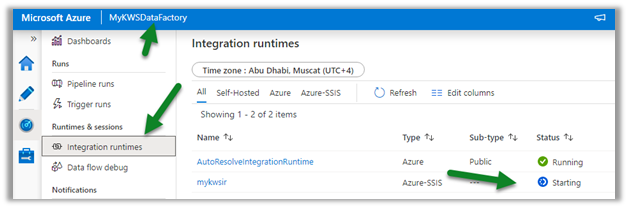

Meanwhile, we can see that our Azure Data Factory has been created, with the integration runtime in Starting status.

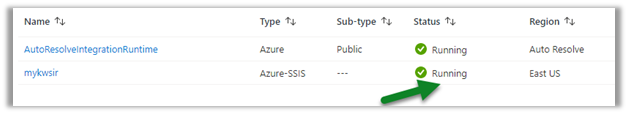

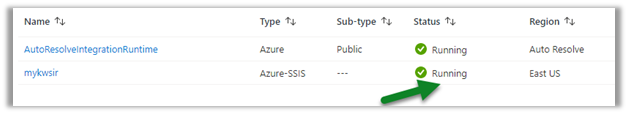
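Instead of refreshing the portal, you can poll the runtime state until it leaves Starting. The sketch below shows the polling logic under stated assumptions. `get_status` is a hypothetical callable standing in for whatever query you use, such as Azure PowerShell. The target state string `"Started"` is an assumption and may appear differently depending on where the state is read.

```python
import time
from typing import Callable


def wait_for_status(
    get_status: Callable[[], str],
    target: str = "Started",
    timeout_s: float = 1800,
    interval_s: float = 30,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll `get_status` until it returns `target`, or raise TimeoutError after `timeout_s`."""
    waited = 0.0
    while True:
        status = get_status()
        if status == target:
            return status
        if waited >= timeout_s:
            raise TimeoutError(f"runtime still {status!r} after {waited:.0f}s")
        sleep(interval_s)
        waited += interval_s


# Simulated status sequence standing in for a real status query.
statuses = iter(["Starting", "Starting", "Started"])
print(wait_for_status(lambda: next(statuses), interval_s=0))
```

Injecting `sleep` keeps the loop testable without real waiting. The default 30-second interval and 30-minute timeout are arbitrary choices.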

After a few minutes, the integration runtime is up and running.

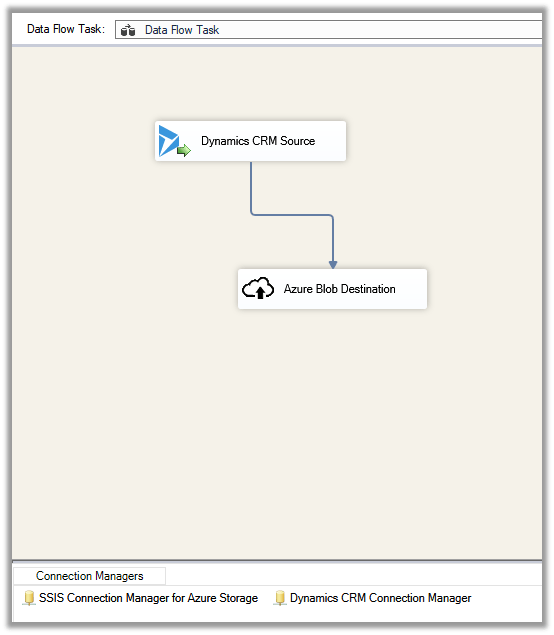

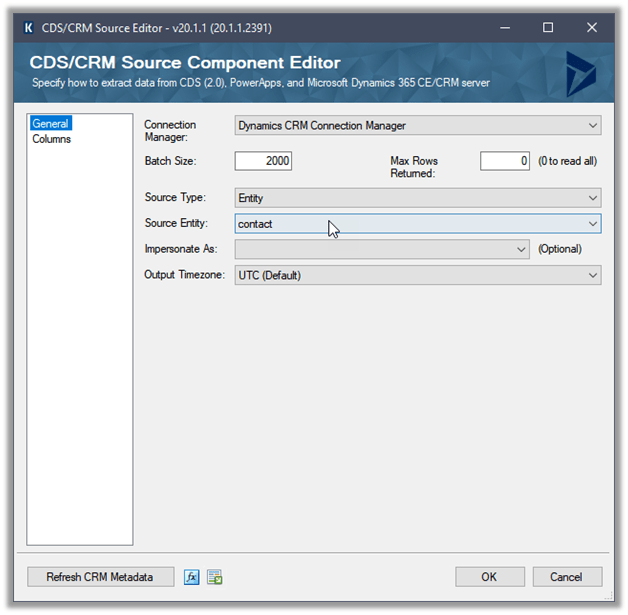

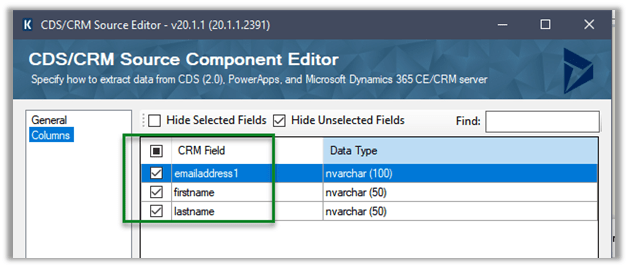

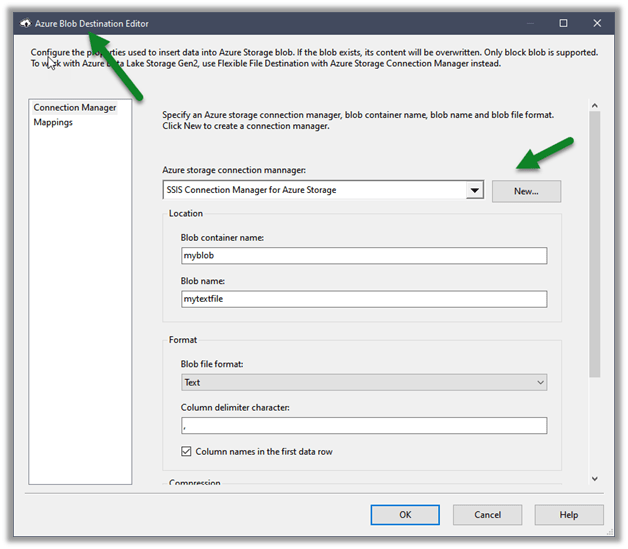

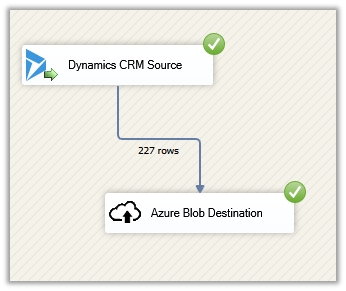

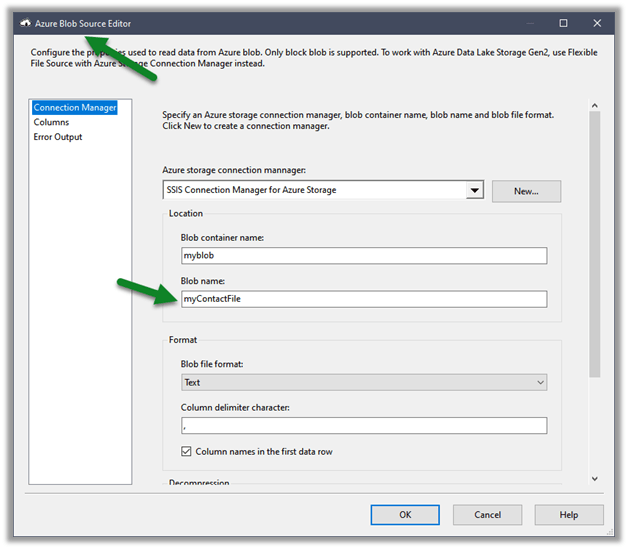

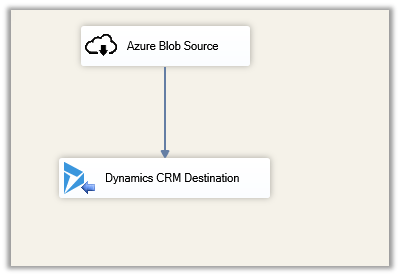

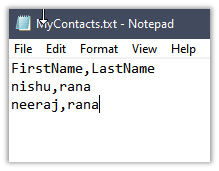

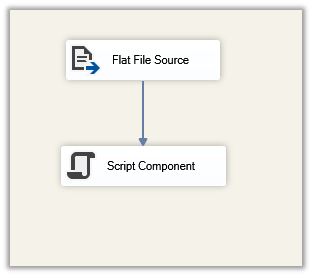

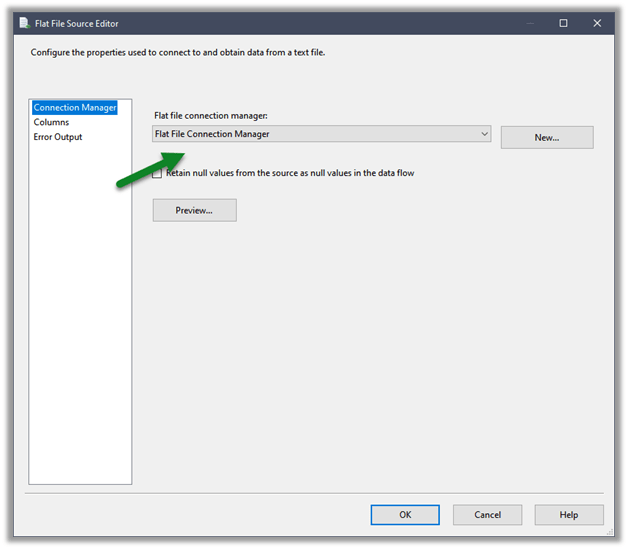

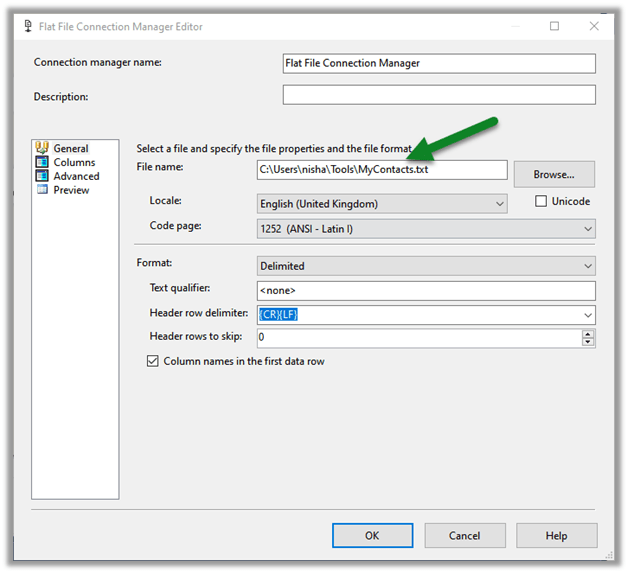

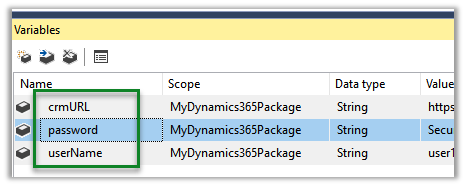

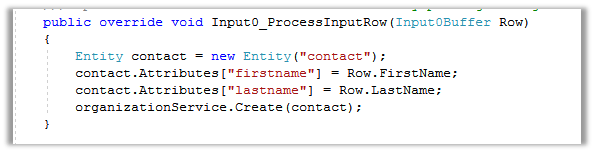

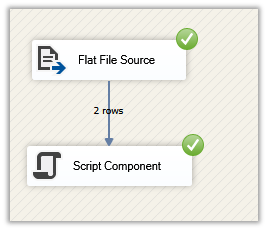

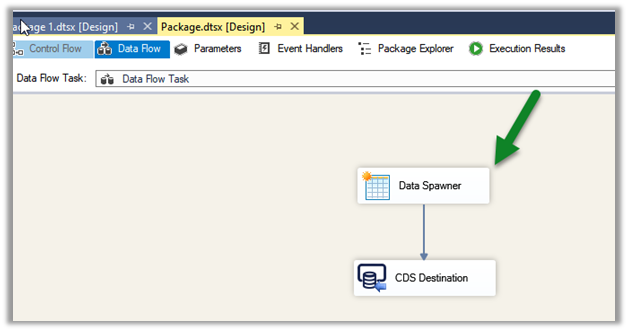

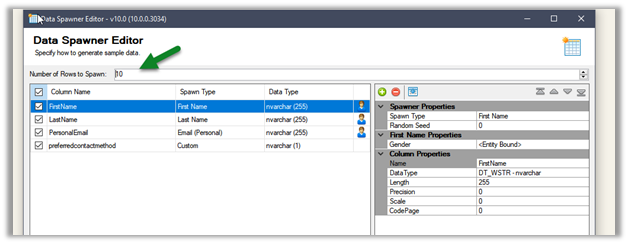

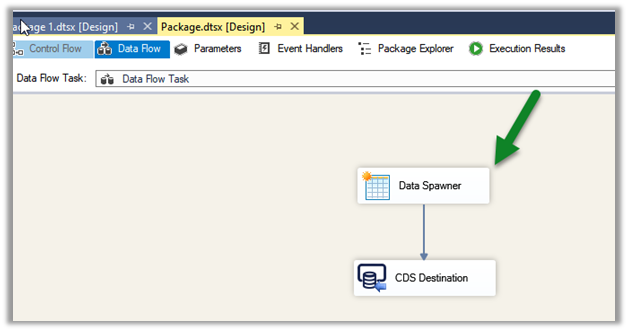

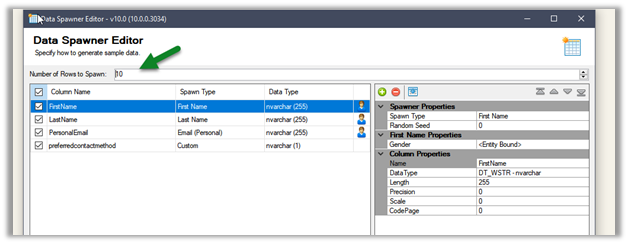

Below is the SSIS package that we will deploy to the cloud.

It uses the Data Spawner component to generate test data for contacts and the CDS Destination component to create those records in CDS.

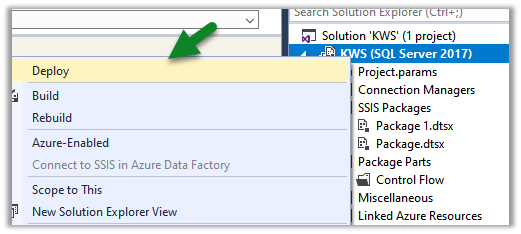

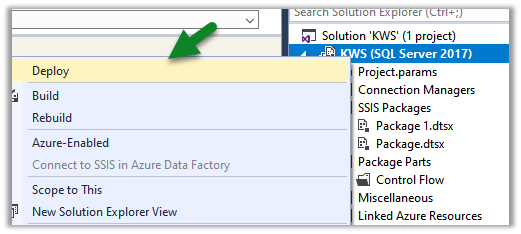

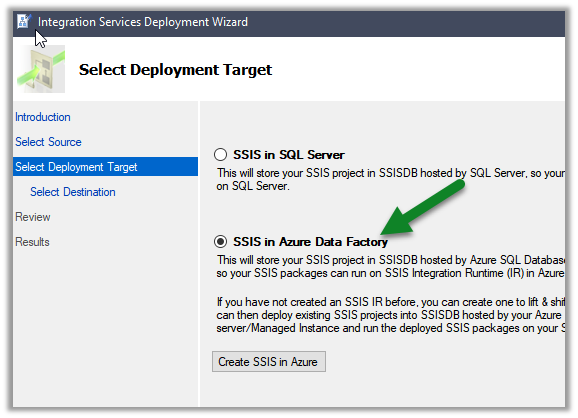
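To illustrate what the package's source produces, here is a rough Python analogue of generating ten test contacts. It is not how Data Spawner works internally. It only mimics the idea of seeded, repeatable test rows. `firstname`, `lastname`, and `emailaddress1` are standard contact attributes in CDS, but the name lists and email format are hypothetical.

```python
import random

# Hypothetical value pools for test data.
FIRST_NAMES = ["Alex", "Maria", "Chen", "Priya", "Tom"]
LAST_NAMES = ["Smith", "Garcia", "Li", "Patel", "Brown"]


def spawn_contacts(count: int = 10, seed: int = 42) -> list:
    """Generate `count` contact rows; the fixed seed makes the output repeatable."""
    rng = random.Random(seed)
    rows = []
    for i in range(count):
        first, last = rng.choice(FIRST_NAMES), rng.choice(LAST_NAMES)
        rows.append({
            "firstname": first,
            "lastname": last,
            # The row index keeps each email unique even when names repeat.
            "emailaddress1": f"{first}.{last}.{i}@example.com".lower(),
        })
    return rows


for row in spawn_contacts():
    print(row)
```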

Right-click the Integration Services project and select Deploy.

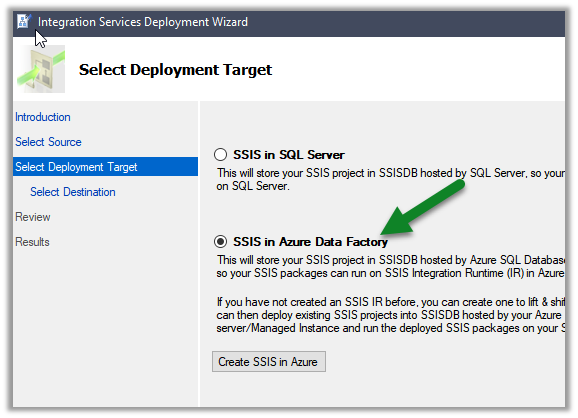

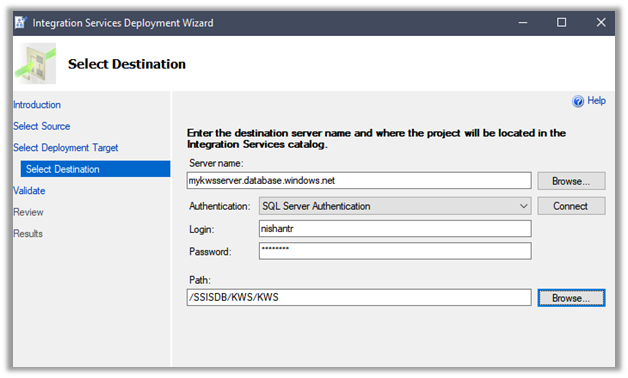

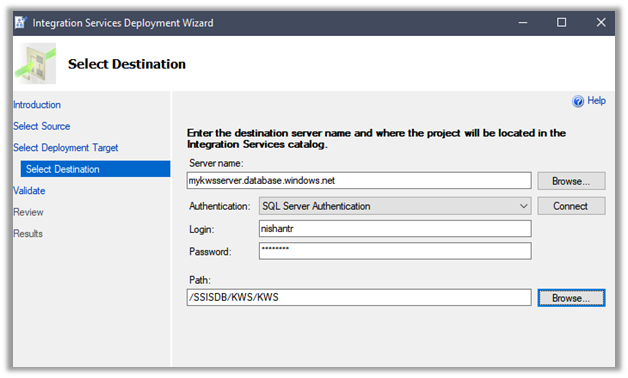

Specify the connection details along with the path.

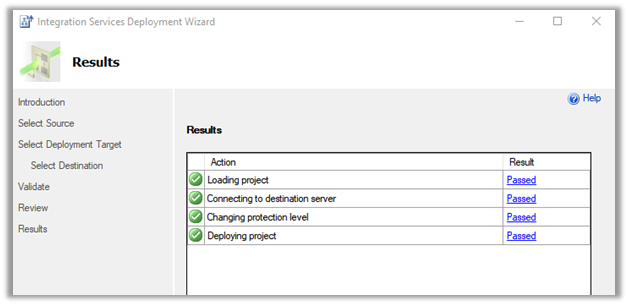

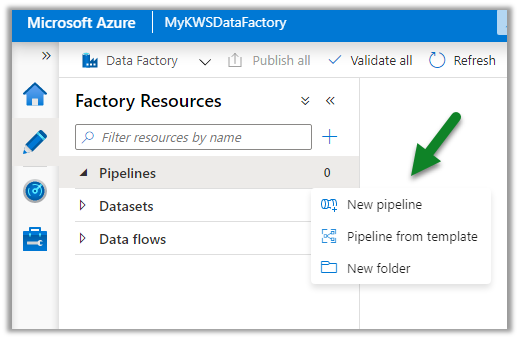

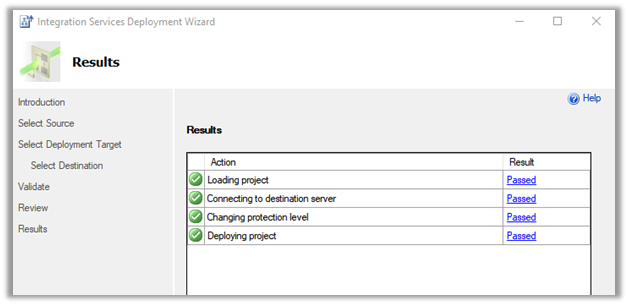

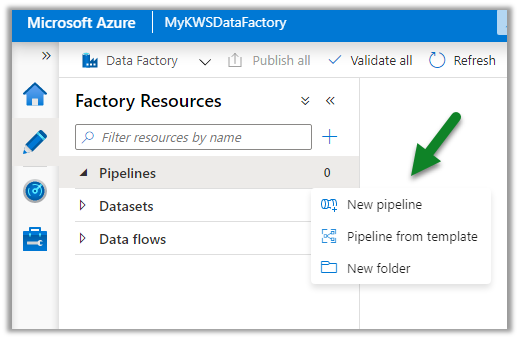

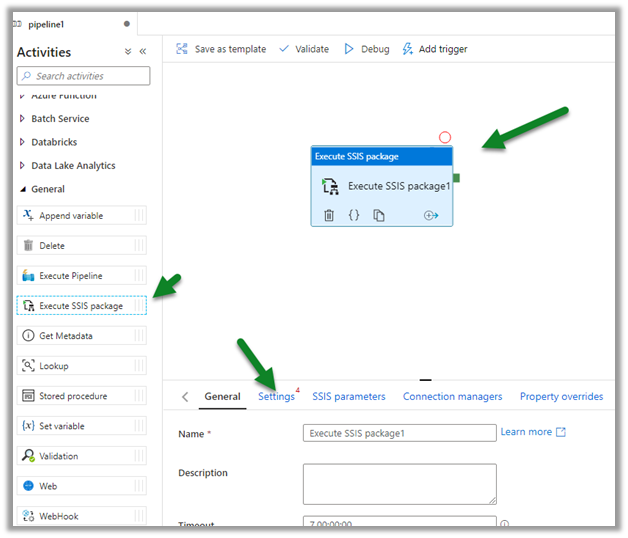
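The wizard needs two values here: the server name, which is the Azure SQL logical server's fully qualified name, and the catalog path, in the form `/SSISDB/<folder>/<project>`. The following small sketch assembles both. The server, folder, and project names are hypothetical.

```python
def azure_sql_server_name(server: str) -> str:
    """Fully qualified name of an Azure SQL logical server."""
    return f"{server}.database.windows.net"


def ssisdb_project_path(folder: str, project: str) -> str:
    """Catalog path used by the Deployment Wizard: /SSISDB/<folder>/<project>."""
    for part in (folder, project):
        if not part or "/" in part:
            raise ValueError(f"invalid catalog name: {part!r}")
    return f"/SSISDB/{folder}/{project}"


# Hypothetical names, for illustration only.
print(azure_sql_server_name("myssisserver"))
print(ssisdb_project_path("KingswaySoft", "CDSContacts"))
```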

After a successful deployment, let us create a new pipeline in Azure Data Factory.

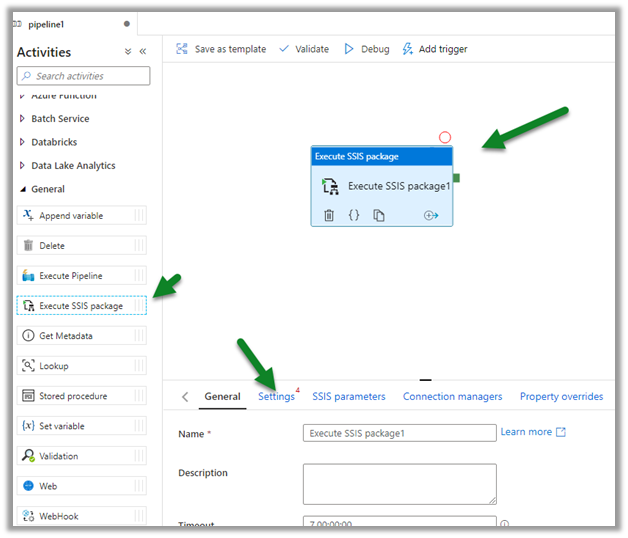

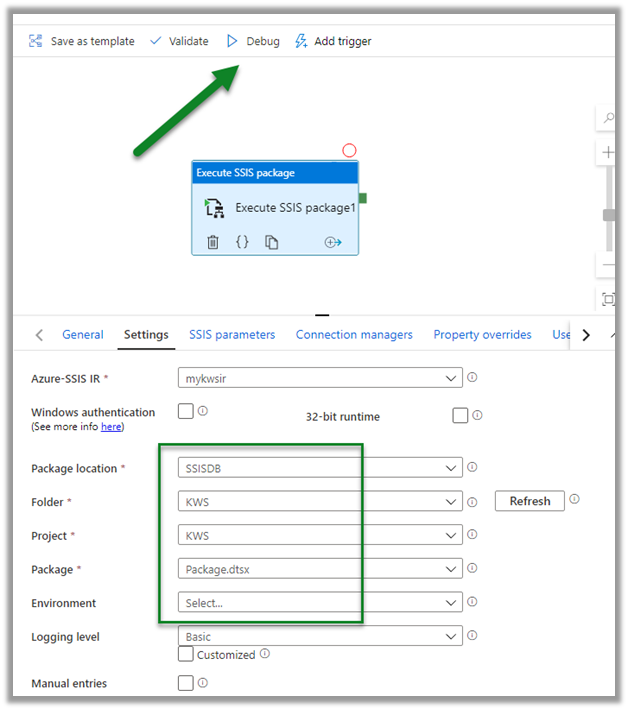

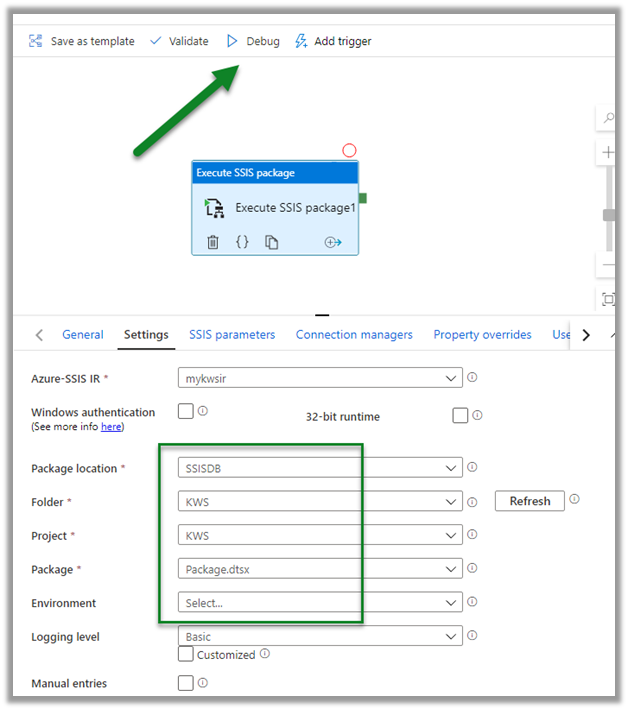

Drag and drop the Execute SSIS Package activity onto the canvas and click the Settings tab.

Select the deployed package, then use Validate and Debug to test the pipeline.

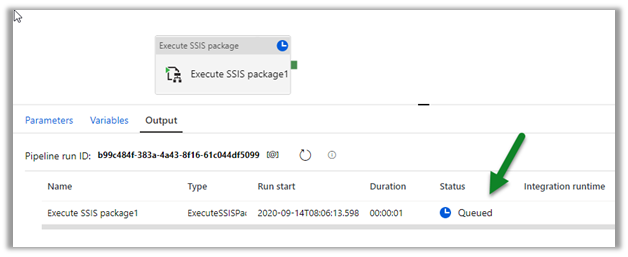

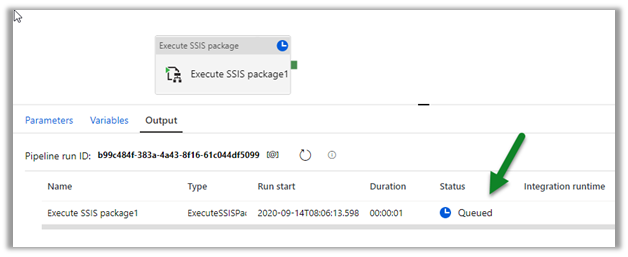

The pipeline run is initially in Queued status.

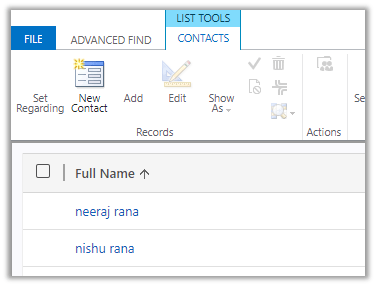

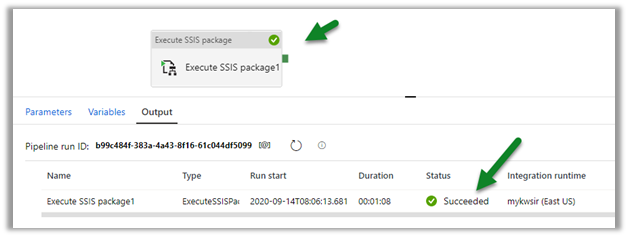

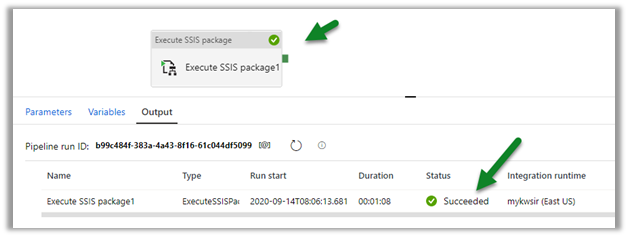
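A Data Factory pipeline run passes through in-flight statuses (Queued, InProgress, Canceling) before it reaches a terminal one (Succeeded, Failed, Cancelled). The sketch below classifies observed statuses so that a monitoring script knows when to stop waiting. The status history in the usage lines is simulated.

```python
IN_FLIGHT = {"Queued", "InProgress", "Canceling"}
TERMINAL = {"Succeeded", "Failed", "Cancelled"}


def is_finished(status: str) -> bool:
    """True once a pipeline run has reached a terminal status."""
    if status in TERMINAL:
        return True
    if status in IN_FLIGHT:
        return False
    raise ValueError(f"unexpected pipeline run status: {status!r}")


def final_status(history):
    """Return the first terminal status in an observed sequence, or None if still running."""
    for status in history:
        if is_finished(status):
            return status
    return None


print(final_status(["Queued", "InProgress", "Succeeded"]))  # Succeeded
print(final_status(["Queued", "InProgress"]))               # None
```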

After successful execution,

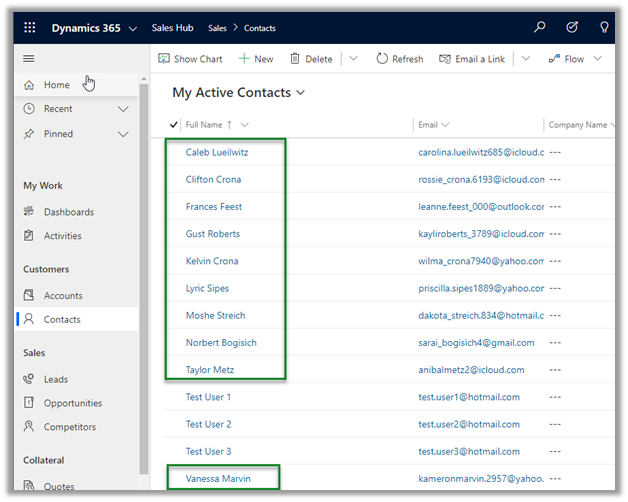

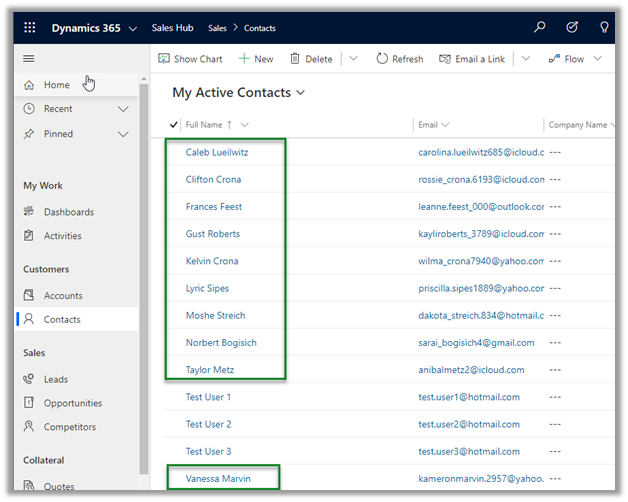

navigate to our Dynamics 365 Sales Hub

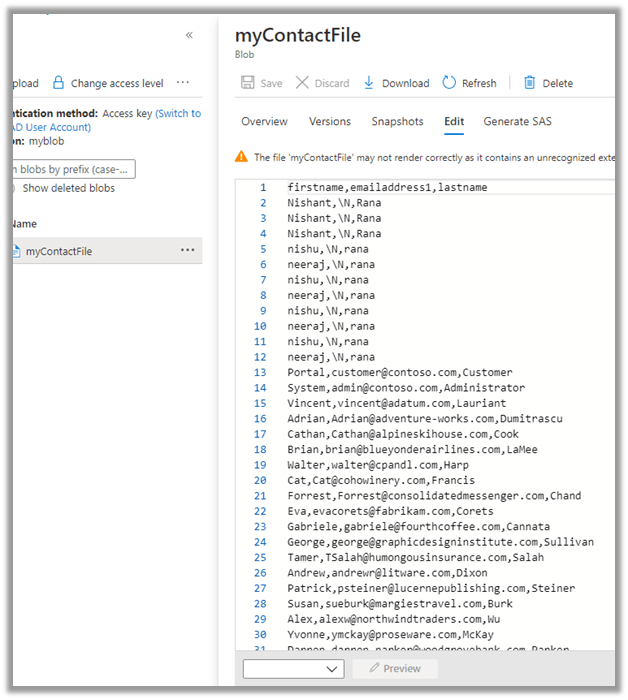

We can see the 10 contact records created by the SSIS package.
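As an alternative to checking in the Sales Hub UI, the records can be counted through the CDS Web API with the OData `$count=true` option. The sketch below only builds the request URL. The organization URL is hypothetical. Sending the request requires an OAuth access token, which is not shown. The query counts all contacts, not only the ones this package created.

```python
from urllib.parse import urlencode


def contacts_count_url(org_url: str, api_version: str = "9.1") -> str:
    """Build a Web API query returning contacts together with an @odata.count value."""
    # safe="$" keeps the OData system query options ($select, $count) unescaped.
    query = urlencode({"$select": "fullname", "$count": "true"}, safe="$")
    return f"{org_url.rstrip('/')}/api/data/v{api_version}/contacts?{query}"


# Hypothetical organization URL.
print(contacts_count_url("https://yourorg.crm.dynamics.com"))
```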

Hope it helps.